For decades, computers have been built to imitate the human brain and its sense of intelligence.

The history of artificial neural networks dates back to the 1950s. Engineers have long been fascinated by quick, on-point decision-making and have strived to replicate it in computers. This later took shape as neural network learning, or deep learning. One particular branch, artificial neural network software, has been used extensively for machine translation and sequence prediction tasks.

Learn how computers bridged the gap with the reflexes of the human brain and used that power to handle language translation, conversation, sentence rewriting, and text summarization. With artificial neural networks, computers can now process words and interpret them much as humans do.

Keep reading to find out how you can deploy artificial neural network software to power contextual understanding within your business applications and simulate human actions through computers.

What is an artificial neural network?

An artificial neural network (ANN), also known as a neural network, is a complex deep learning model that replicates the biological functioning of the human brain, in particular the mechanism of the central nervous system. In a basic network, each input signal is considered independent of the next input signal, so the input data at two different nodes is assumed to have no relationship.

The neural network processes inputs in sequence, predicting the output based on the nature of each individual input node.

When a dozen terms like artificial intelligence, machine learning, deep learning, and neural networks are thrown around, it’s easy to get confused. However, the actual difference between these artificial intelligence terms is not that complicated.

How does an artificial neural network work?

The answer is just what the popular description suggests. An artificial neural network is a system of data processing and output generation that replicates the neural system to unravel non-linear relationships in a large dataset. The data might come from sensory sources and can be in the form of text, images, or audio.

The best way to understand how an artificial neural network works is to understand how a natural neural network inside the brain works and draw a parallel between them. Neurons are the fundamental component of the human brain and are responsible for learning and for retaining knowledge and information as we know it. You can consider them the processing units of the brain. They take sensory data as input, process it, and give output that is used by other neurons. The information is processed and passed along until a decisive result is attained.

An artificial neural network is based on this mechanism of the human brain. The same process is used to extract responses from artificial neural network software and generate output.

Structure of the basic neural network

The basic neural network in the brain is connected by synapses. You can visualize them as the end nodes of a bridge that connects two neurons. So, the synapse is the meeting point for two neurons. Synapses are an important part of this system because the strength of a synapse determines the depth of understanding and the retention of information.

Source: neuraldump.net

All the sensory data that your brain collects in real time is processed through these neural networks. Each signal has a point of origin in the system. As signals are processed by the initial neurons, the processed electrical signal coming out of one neuron becomes the input for another neuron. This micro-level information processing at each layer of neurons is what makes the network effective and efficient.

By replicating this recurring pattern of data processing throughout the neural network, ANNs can produce superior outputs.

Working methodology of an artificial neural network

In an ANN, everything is designed to replicate this very process. Don’t worry about the mathematical equation; that’s not the key idea to understand right now. Each input, labeled ‘X’, is multiplied by a weight, ‘W’, to generate a weighted signal. This replicates the role of a synapse’s strength in the brain. A bias variable is added to adjust the output of the function.

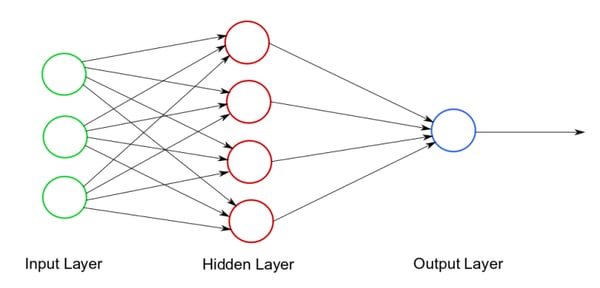
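The weighted-signal idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `neuron_output`, the example numbers, and the choice of a simple step activation are hypothetical and not taken from any particular library.

```python
def neuron_output(inputs, weights, bias):
    """Return 1 if the weighted sum of the inputs plus the bias is positive, else 0."""
    # Multiply each input X by its weight W and add the results together.
    weighted_signal = sum(x * w for x, w in zip(inputs, weights))
    # The bias shifts the total before the activation decides the output.
    total = weighted_signal + bias
    # A step activation (an assumption here): fire only when the total is positive.
    return 1 if total > 0 else 0


# Hypothetical values chosen only for illustration.
x = [1.0, 0.5]
w = [0.6, -0.4]
b = -0.1
print(neuron_output(x, w, b))
```

Changing the bias changes how easily the neuron fires without touching the weights, which is why it is described as adjusting the output of the function.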

So, all of this data is processed in the function, and you end up with an output. That’s what a one-layer neural network, or perceptron, looks like. The idea of an artificial neural network revolves around connecting multiple combinations of such artificial neurons to get stronger outputs. That is why the typical artificial neural network’s conceptual framework looks a lot like this:

Source: KDnuggets

We’ll soon define these layers as we explore how an artificial neural network functions. But for a rudimentary understanding of an artificial neural network, you know the first principles now.

This mechanism is used to decipher large datasets. The output usually tends to establish a causal relationship between the input variables that can be used for forecasting. Now that you know the process, you can fully appreciate the technical definition here:

“An artificial neural network is a network modeled after the human brain by creating an artificial neural system via a pattern-recognizing computer algorithm that learns from, interprets, and classifies sensory data.”

Artificial neural network training process

Brace yourself; things are about to get interesting here. And don’t worry: you don’t have to do a ton of math right now.

Activation function

The magic happens first at the activation function. The activation function does initial processing to determine whether the neuron will be activated or not. If the neuron is not activated, it passes no signal forward, and nothing happens downstream. This is crucial to have in a neural network; otherwise, the system would be forced to process a ton of information that has no impact on the output. You see, the brain has limited capacity, but it has been optimized to use it to the fullest.

Non-linearity

One central property common across all artificial neural networks is the concept of non-linearity. Most variables studied in real life have a non-linear relationship.

Take, for instance, the price of chocolate and the number of chocolates. Assume that one chocolate costs $1. How much would 100 chocolates cost? Probably $100. How much would 10,000 chocolates cost? Probably not $10,000; either the seller will add the cost of the extra packaging needed to put all the chocolates together, or she will reduce the cost because you are moving so much of her inventory off her hands in one go. That is the concept of non-linearity.

Types of activation functions

An activation function uses basic mathematical principles to determine whether the information is to be processed or not. The most common forms of activation functions are the binary step function, logistic function, hyperbolic tangent function, and rectified linear units. Here’s the basic definition of each one of these:

- Binary step function: This function activates a neuron based on a threshold. If the input is above a benchmark value, the neuron is activated; otherwise, it is not.

- Logistic function: This function has an ‘S’-shaped curve and is used when probabilities are the key criterion for determining whether the neuron should be activated. Because the curve is smooth, you can calculate its slope at any point. The value of this function lies between 0 and 1.

- Differential function: The slope is calculated using a differential function (a derivative). The concept is used when two variables don’t have a linear relationship. The slope at a point is the slope of the tangent line that touches the curve at that point. The problem with the logistic function is that its output is never negative, so it is not well suited to processing information with negative values.

- Hyperbolic tangent function: It is quite similar to the logistic function, except its values fall between -1 and +1. So, the problem of negative values not being processed in the network goes away.

- Rectified linear units (ReLU): This function’s values lie between 0 and positive infinity. ReLU simplifies a few things: if the input is positive, it returns the value of ‘x’; for all other inputs, it returns ‘0’. You can also use a Leaky ReLU, whose values range between negative infinity and positive infinity. It is used when the relationship between the variables being processed is very weak and might otherwise get dropped by the activation function altogether.
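The activation functions listed above are short enough to write out directly. The sketch below uses only Python’s standard `math` module; the function names, the default threshold of 0, and the Leaky ReLU slope of 0.01 are illustrative assumptions rather than fixed conventions.

```python
import math


def binary_step(x, threshold=0.0):
    """Activate (1.0) only when the input is above the threshold."""
    return 1.0 if x > threshold else 0.0


def logistic(x):
    """S-shaped curve with values strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def logistic_slope(x):
    """Slope (derivative) of the logistic curve at the point x."""
    s = logistic(x)
    return s * (1.0 - s)


def hyperbolic_tangent(x):
    """Similar S shape, but with values between -1 and +1."""
    return math.tanh(x)


def relu(x):
    """Return x for positive inputs and 0 for everything else."""
    return max(0.0, x)


def leaky_relu(x, negative_slope=0.01):
    """Like ReLU, but lets a small fraction of negative inputs through."""
    return x if x > 0 else negative_slope * x


for value in (-2.0, 0.0, 2.0):
    print(value, binary_step(value), logistic(value),
          hyperbolic_tangent(value), relu(value), leaky_relu(value))
```

Note that the logistic output never drops below 0, while the hyperbolic tangent and Leaky ReLU both keep some information about negative inputs.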

Hidden layer

Look at the same two diagrams of a perceptron and a neural network. What’s the difference, apart from the number of neurons? The key difference is the hidden layer. A hidden layer sits right between the input layer and the output layer in a neural network. Its job is to refine the processing and eliminate variables that will not have a strong impact on the output.

If there are many instances in a dataset where a change in the value of an input variable has a noticeable impact on the output variable, the hidden layer will capture that relationship. The hidden layer makes it easy for the ANN to send stronger signals to the next layer of processing.
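To make the role of the hidden layer concrete, here is a minimal sketch of data flowing from an input layer through one hidden layer to an output. The layer sizes, the weight and bias values, and the helper names (`layer`, `forward`) are all hypothetical, picked only so the arithmetic is easy to follow.

```python
import math


def relu(x):
    return max(0.0, x)


def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases, activation):
    """Compute one layer: each neuron takes a weighted sum plus bias, then activates."""
    return [
        activation(sum(x * w for x, w in zip(inputs, neuron_weights)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


# Hypothetical parameters: 2 inputs -> 2 hidden neurons -> 1 output neuron.
HIDDEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, 1.0]]
OUTPUT_BIASES = [0.0]


def forward(inputs):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = layer(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    output = layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, logistic)
    return hidden, output[0]


hidden_values, prediction = forward([2.0, 1.0])
print(hidden_values, prediction)
```

With the input `[0.0, 3.0]`, the first hidden neuron outputs 0, which is the sketch’s version of the hidden layer dropping a signal that does not contribute to the output.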

The learning period

Even after doing all this math and understanding how the hidden layer operates, you might be wondering how an artificial neural network actually learns.

Learning, in the simplest terms, is establishing causality between two things (actions, processes, variables, and so on). This causality can be difficult to establish: correlation does not equal causation, and it is often hard to tell which variable affects the other. How does an ANN algorithm figure this out?

This can be done mathematically. The error is measured as the squared difference between the dataset’s actual value and the network’s output value; you can think of it as the degree of error. We square it because the difference can sometimes be negative.

You can summarize each cycle of input-to-output processing with the cost function. Your job, and the ANN’s, is to minimize the cost function to its lowest possible value. You achieve this by adjusting the weights in the ANN. (Weights are the numeric values given to each input to reflect its influence on the output.) There are several ways of doing this, but as long as you understand the principle, you would simply be using different tools to execute it.
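As a rough illustration of the squared-difference idea, the sketch below averages the squared errors over a small set of examples, a common choice known as mean squared error. The function names and sample numbers are assumptions made for this example.

```python
def squared_error(actual, predicted):
    """Squared difference between the actual value and the network's output."""
    return (actual - predicted) ** 2


def cost(actual_values, predicted_values):
    """Average squared error across all examples (mean squared error)."""
    errors = [
        squared_error(actual, predicted)
        for actual, predicted in zip(actual_values, predicted_values)
    ]
    return sum(errors) / len(errors)


# Hypothetical actual values and network outputs.
actual = [1.0, 2.0]
predicted = [1.0, 4.0]
print(cost(actual, predicted))
```

Squaring means an overestimate and an underestimate of the same size are penalized equally, so positive and negative errors cannot cancel each other out.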

With each cycle, we aim to minimize the cost function. The process of going from input to output is called forward propagation. The process of using the output error to minimize the cost function by adjusting weights in reverse order, from the output layer back toward the input layer, is called backpropagation. (In recurrent networks, the same idea applied across time steps is called backpropagation through time, or BPTT.)

You can keep adjusting these weights using either the brute force method, which becomes inefficient when the dataset is too large, or batch gradient descent, which is an optimization algorithm. Now you have an intuitive understanding of how an artificial neural network learns.
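Batch gradient descent can be shown on the smallest possible model: a single weight with no bias, trained to fit `y = weight * x`. This is a simplified sketch, not a full backpropagation implementation; the learning rate, epoch count, and data are hypothetical.

```python
def batch_gradient_descent(xs, ys, learning_rate=0.05, epochs=200):
    """Fit a single weight so that weight * x approximates y."""
    weight = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to the weight,
        # computed over the whole batch of examples at once.
        gradient = sum(2 * (weight * x - y) * x for x, y in zip(xs, ys)) / n
        # Step in the direction that lowers the cost.
        weight -= learning_rate * gradient
    return weight


# Hypothetical data generated by the rule y = 2 * x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]
print(batch_gradient_descent(xs, ys))
```

Instead of trying every possible weight, as a brute force search would, each step uses the slope of the cost function to decide which way to move and by how much.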

Recurrent neural networks (RNN) vs. convolutional neural networks (CNN)

Understanding these two forms of neural networks will be your introduction to two different sides of AI application: computer vision and natural language processing. In the simplest form, these two branches of AI help a machine label the components of an image and interpret text sequences.


Convolutional neural networks are typically used for computer vision tasks in artificial intelligence systems. These networks analyze image features and interpret their vector positions to label the image or any component within the image. Apart from the commonly used neural activation functions, they add a pooling function and a convolution function. A convolution function, in simpler terms, shows how one input image and a second input (a filter) combine to produce a third image (the result). This is also known as feature mapping. You can imagine it as a filter (a new set of pixel values) sliding over your input image (the original set of pixel values) to produce a resulting image (modified pixel values). The resulting features are then fed to a classifier, such as a support vector machine, that determines the category of the image.
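The filter-over-image picture can be sketched without any libraries. The code below slides a small filter across an image to build a feature map, then applies max pooling. As in most CNN frameworks, the filter is applied without flipping (strictly speaking, a cross-correlation). The tiny image, the edge-detecting filter, and the function names are assumptions for illustration.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kernel_h)
                for dj in range(kernel_w)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


# Hypothetical 4x4 image: bright on the left, dark on the right.
image = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [1, 1, 0, 0],
]
# Hypothetical 2x2 filter that responds to a bright-to-dark vertical edge.
vertical_edge = [
    [1, -1],
    [1, -1],
]
features = convolve2d(image, vertical_edge)
print(features)
print(max_pool(features))
```

The feature map is largest exactly where the image changes from bright to dark, and pooling shrinks the map while keeping that strongest response.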

Recurrent neural networks, or RNNs, establish the relationship between parts of a text sequence to clarify the context and generate meaningful output. They are designed for sequential data, where connections between words form a directed graph along the temporal sequence. This allows an RNN to retain information from earlier inputs while working with the current input. The hidden state from the previous time step is fed to the hidden layer at the current time step along with the new input word, which is supplied to the same layer. This mechanism makes RNNs well suited for tasks such as language modeling, speech recognition, and time series prediction. By maintaining a form of memory through hidden states, RNNs can effectively capture patterns and dependencies in sequences, allowing them to process input sequences of varying lengths.
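The way an RNN carries its hidden state from one time step to the next can be sketched with a single scalar hidden state. Real RNNs use vectors and learned weight matrices; the scalar weights, the `tanh` activation, and the function names here are simplifying assumptions.

```python
import math


def rnn_step(previous_hidden, current_input, w_hidden, w_input, bias):
    """Combine the previous hidden state with the current input."""
    return math.tanh(w_hidden * previous_hidden + w_input * current_input + bias)


def run_rnn(sequence, w_hidden=0.5, w_input=1.0, bias=0.0):
    """Process a sequence of any length, returning the hidden state at each step."""
    hidden = 0.0  # no memory before the first input
    states = []
    for value in sequence:
        hidden = rnn_step(hidden, value, w_hidden, w_input, bias)
        states.append(hidden)
    return states


# Hypothetical numeric sequence: a single signal followed by silence.
print(run_rnn([1.0, 0.0, 0.0]))
```

Even though the second and third inputs are zero, the hidden states stay above zero, which is the memory of the first input fading gradually. The same loop works for a sequence of any length.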

5 applications of artificial neural networks

What we’ve talked about so far was all happening under the hood. Now we can zoom out and see these ANNs in action to fully appreciate their bond with our evolving world:

1. Personalizing recommendations on e-commerce platforms

One of the earliest applications of ANNs has been personalizing e-commerce platform experiences for each user. Do you remember the really effective recommendations on Netflix? Or the just-right product suggestions on Amazon? They are a result of ANNs.

There’s a ton of data being used here: your past purchases, demographic data, geographic data, and data that shows what people buying the same product bought next. All of these serve as inputs to determine what might work for you. At the same time, what you actually buy helps optimize the algorithm. With every purchase, you are enriching both the company and the algorithm that powers the ANN. Likewise, every new purchase made by others on the platform also improves the algorithm’s prowess in recommending the right products to you.

2. Harnessing natural language processing for conversational chatbots

Not long ago, chat boxes started picking up steam on websites. An agent would sit on one side and help you out with the queries you typed in the box. Then, natural language processing (NLP) was introduced to chatbots, and everything changed.

NLP often uses statistical rules to replicate human language capacities and, like other ANN applications, gets better with time. Your punctuation, intonation and enunciation, grammatical choices, syntactical choices, word and sentence order, and even your language of choice can serve as inputs to train the NLP algorithm.

The chatbot becomes conversational by using these inputs to understand the context of your queries and formulate answers that best suit your style. The same NLP is also being used for audio editing in music and for security verification purposes.

3. Predicting outcomes of high-profile events

Most of us follow the outcome predictions made by AI-powered algorithms during presidential elections as well as the FIFA World Cup. Since both events are phased, the algorithm can quickly gauge its efficacy and minimize the cost function as teams and candidates get eliminated. The real challenge in such situations is the number of input variables. From candidates to player stats to demographics to physical capabilities, everything needs to be incorporated.

In stock markets, predictive algorithms that use ANNs have been around for a while now. News updates and financial metrics are the key input variables used. Thanks to this, most exchanges and banks are easily able to trade assets under high-frequency trading initiatives at speeds that far exceed human capabilities.

The problem with stock markets is that the data is always noisy. Randomness is very high because the degree of subjective judgment, which can affect the price of a security, is very high. Nevertheless, every major bank these days is using ANNs in market-making activities.

4. Credit sanctions

ANNs are used to calculate credit scores, sanction loans, and determine risk factors associated with applicants registering for credit.

Lenders can analyze customer data with strongly established weights and use the information to determine the risk profile associated with a loan application. Your age, gender, city of residence, school of graduation, industry of employment, salary, and savings ratio are all used as inputs to determine your credit risk score.

What was earlier heavily dependent on your individual credit score has now become a much more comprehensive mechanism. That is the reason why several private fintech players have jumped into the personal loans space to run the same ANNs and lend to people whose profiles are considered too risky by banks.

5. Self-driving cars

Tesla, Waymo, and Uber have been using ANNs in their vehicles’ driving systems. In addition to ANNs, they use other techniques, such as object recognition, to build sophisticated and intelligent self-driving cars.

Much of self-driving has to do with processing information that comes from the real world in the form of nearby vehicles, road signs, natural and artificial lights, pedestrians, buildings, and so on. Obviously, the neural networks powering these self-driving cars are more complicated than the ones we discussed here, but they do operate on the same principles that we explained.

Best artificial neural network software in 2024

Based on G2 user reviews, we have curated a list of the top five artificial neural network software products for businesses in 2024. These tools will help optimize data engineering and data development workflows while adding intelligent features and benefits to your core product domain.

1. Google Cloud Deep Learning VM Image

Google Cloud Deep Learning VM Image provides high-performance virtual machines pre-configured specifically for deep learning tasks. These VMs come pre-installed with the tools you need, including popular frameworks like TensorFlow and PyTorch, essential libraries, and NVIDIA CUDA/cuDNN support to leverage your GPU for faster processing. This minimizes the time you spend setting up your environment and lets you jump right in. Deep Learning VM Images also integrate with Vertex AI, allowing you to manage training and allocate resources cost-effectively.

What users like best:

“You can quickly provision a VM with everything you need for your deep learning project on Google Cloud. Deep Learning VM Image makes it simple and quick to create a VM image containing all the most popular.”

– Google Cloud Deep Learning VM Image Review, Ramcharn H.

What users dislike:

“The learning curve to use the software is quite steep.”

– Google Cloud Deep Learning VM Image Review, Daniel O.

2. Microsoft Cognitive Toolkit (Formerly CNTK)

The Microsoft Cognitive Toolkit (formerly CNTK) helps businesses optimize data workflows and reduce analytics expenditure through a high-performance, open-source deep learning framework. CNTK uses automatic differentiation and parallel GPU/server execution to train complex neural networks efficiently, enabling faster development cycles and cost-effective deployment of AI solutions for data analysis.

What users like best:

“Most helpful feature is easy navigation and low code for model creation. Any novice can easily understand the platform and create models easily. Support for various libraries for different languages makes it stand out! Great product compared to Google AutoML.”

– Microsoft Cognitive Toolkit (Formerly CNTK), Anubhav I.

What users dislike:

“Less control to customize the services to our requirements and buggy updates to CNTK SDKs which sometimes breaks the production code.”

– Microsoft Cognitive Toolkit (Formerly CNTK), Chinmay B.

3. AIToolbox

AIToolbox leverages a high-performance, modular artificial neural network architecture for scalable data processing. It simplifies complex tasks and includes the latest advanced machine learning techniques, such as TensorFlow, support vector machines, regression, exploratory data analysis, and principal component analysis, so your teams can generate forecasts and predictions. The framework helps businesses streamline workflows, optimize feature engineering, and reduce the computational overhead associated with traditional analytics, ultimately delivering cost-effective AI-powered insights.

What users like best:

“Aitoolbox offers many tutorials, article and guides that help learn about new technologies. Aitoolbox provides access to various ai and ml tools and libraries, making it easier for user to implement and experimental with new technologies. Aitoolbox is designed to be user friendly with features that make it easy for users access and use the platform.”

– AIToolbox Review, Hem G.

What users dislike:

“AIToolbox encounters limitations within its specific context. Consequently, there exists a deficiency in general intuition, and the AI’s precision is not always absolute. Emotions and security measures are absent from its functionalities.”

– AIToolbox Review, Saurabh B.

4. Caffe

Caffe offers a high-throughput, open-source deep learning framework for rapid prototyping and deployment. Its modular architecture supports diverse neural network topologies (CNNs, RNNs) and integrates with popular optimization algorithms (SGD, Adam) for efficient training on GPUs and CPUs. This helps businesses explore cost-effective AI solutions for data analysis and model development.

What users like best:

“The upsides of using Caffe are its speed, flexibility, and scalability. It is extremely fast and efficient, allowing you to quickly design, train, and deploy deep neural networks. It provides a wide range of useful tools and libraries, making it easier to create complex models and to customize existing ones. Finally, Caffe is very scalable, allowing you to easily scale up your models to large datasets or to multiple machines, making it a great choice for distributed training.”

– Caffe Review, Ruchit S.

What users dislike:

“Being in research department and doing more of deep learning work on images, I would require OpenCL which is still need to add more features. So I need to switch to other software for that some. Would be better if it has OpenCL features added.”

– Caffe Review, Sonali S.

5. Synaptic.js

Synaptic.js is an open-source JavaScript neural network library for Node.js and the browser. It is based on artificial neural network architecture and helps you train and test your data with simple function calls, making it straightforward to build and deploy neural networks from JavaScript code.

What users like best:

“It is very easy to build a neural network in JavaScript by making use of Synaptic.js. It includes built-in architectures like multilayer perceptron, Hopfield networks, etc. Also, there aren’t many other libraries out there that allow you to build a second order network.”

– Synaptic.js Review, Sameem S.

What users dislike:

“Not suitable for a large neural network. It needs more work on the documentation; some of the links on the readme are broken.”

– Synaptic.js, Chetan D.

Creating “artificial brain passers” for computers

ANNs are getting more and more sophisticated day by day. ANN-powered NLP tools are now helping in early mental health diagnosis, medical imaging, and drone delivery. As ANNs become more complex and advanced, the need for human intelligence in these systems will diminish. Even areas like design have started deploying AI solutions with generative design.

Learn how the future of artificial intelligence, artificial general intelligence, will set the stage for massive industrial automation and AI globalization.

This article was originally published in 2020. It has been updated with new information.