A new buzzword is making waves in the tech world, and it goes by several names: large language model optimization (LLMO), generative engine optimization (GEO) or generative AI optimization (GAIO).

At its core, GEO is about optimizing how generative AI applications present your products, brands or website content in their results. For simplicity, I’ll refer to this concept as GEO throughout this article.

I’ve previously explored whether it’s possible to shape the outputs of generative AI systems. That discussion was my initial foray into the topic of GEO.

Since then, the landscape has evolved rapidly, with new generative AI applications capturing significant attention. It’s time to delve deeper into this fascinating area.

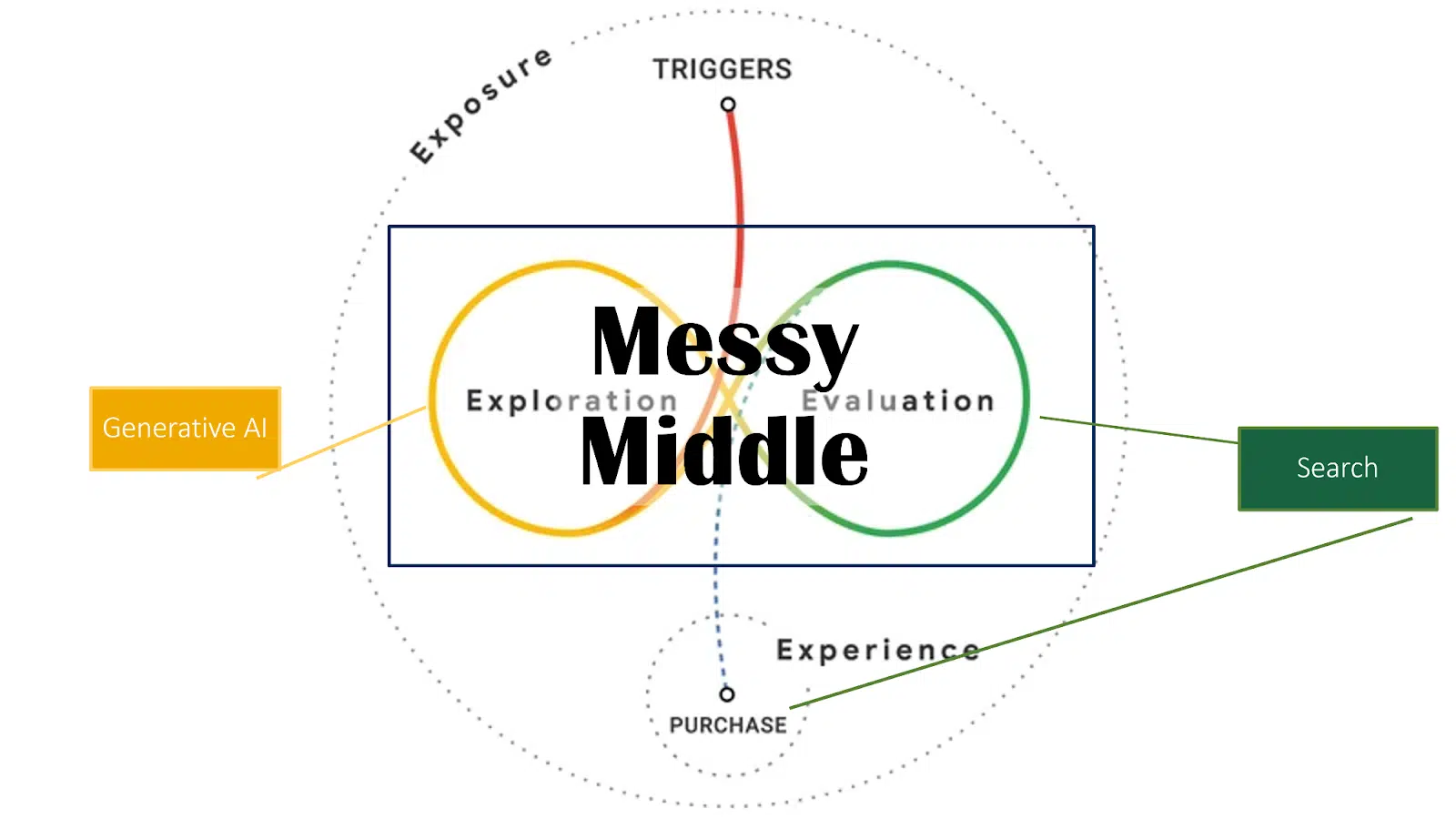

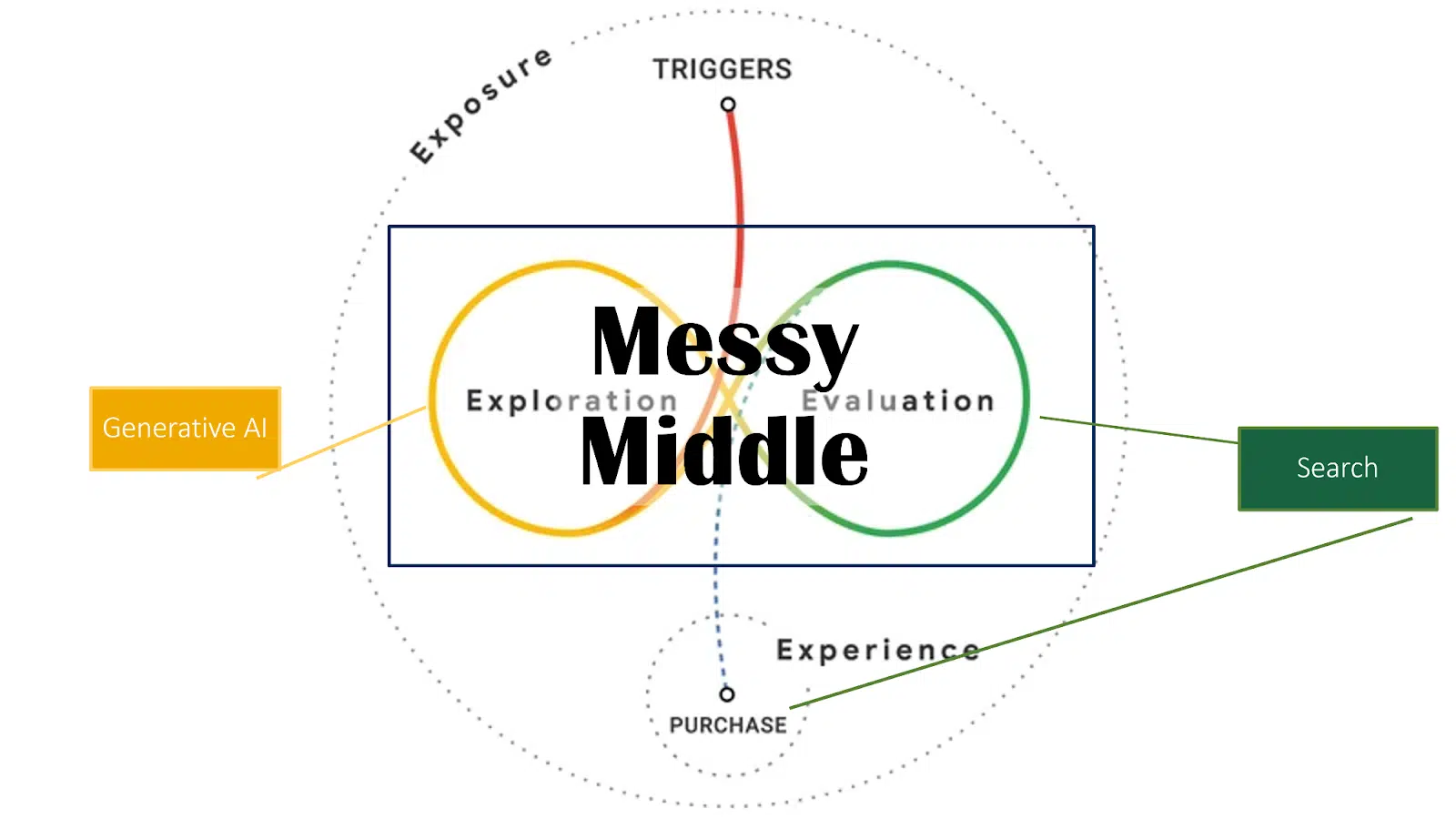

Platforms like ChatGPT, Google AI Overviews, Microsoft Copilot and Perplexity are revolutionizing how users search for and consume information, and transforming how businesses and brands can gain visibility in AI-generated content.

A quick disclaimer: no proven methods exist yet in this field.

It’s still too new, reminiscent of the early days of SEO, when search engine ranking factors were unknown and progress relied on testing, research and a deep technological understanding of information retrieval and search engines.

Understanding the landscape of generative AI

Understanding how natural language processing (NLP) and large language models (LLMs) function is critical at this early stage.

A solid grasp of these technologies is essential for identifying future potential in SEO, digital brand building and content strategies.

The approaches outlined here are based on my research of scientific literature, generative AI patents and over a decade of experience working with semantic search.

How large language models work

Core functionality of LLMs

Before engaging with GEO, it’s essential to have a basic understanding of the technology behind LLMs.

As with search engines, understanding the underlying mechanisms helps you avoid chasing ineffective hacks or false recommendations.

Investing a few hours to grasp these concepts can save resources by steering you away from unnecessary measures.

What makes LLMs revolutionary

LLMs, such as GPT models, Claude or LLaMA, represent a transformative leap in search technology and generative AI.

They change how search engines and AI assistants process and respond to queries by moving beyond simple text matching to deliver nuanced, contextually rich answers.

According to research such as Microsoft’s “Large Search Model: Redefining Search Stack in the Era of LLMs,” LLMs demonstrate remarkable capabilities in language comprehension and reasoning.

Core functionality in search

The core functionality of LLMs in search is to process queries and produce natural language summaries.

Instead of just extracting information from existing documents, these models can generate comprehensive answers while maintaining accuracy and relevance.

This is achieved through a unified framework that treats all (search-related) tasks as text generation problems.

What makes this approach particularly powerful is its ability to customize answers through natural language prompts. The system first generates an initial set of query results, which the LLM refines and improves.

If additional information is needed, the LLM can generate supplementary queries to collect more comprehensive data.

The underlying processes of encoding and decoding are key to their functionality.

The encoding process

Encoding involves processing and structuring training data into tokens, which are the fundamental units used by language models.

Tokens can represent words, n-grams, entities, images, videos or entire documents, depending on the application.

It’s important to note, however, that LLMs do not “understand” in the human sense: they process data statistically rather than comprehending it.

Transforming tokens into vectors

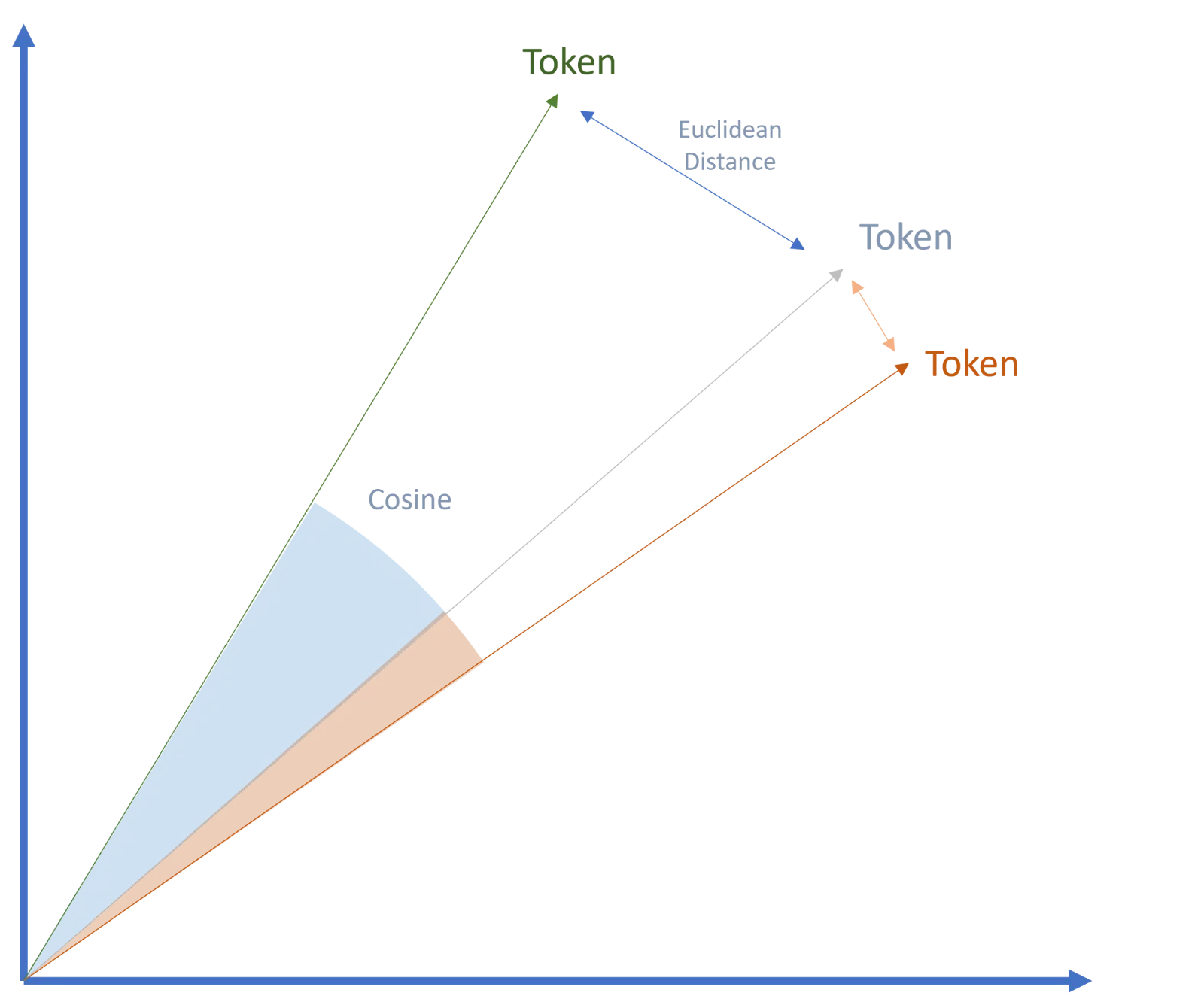

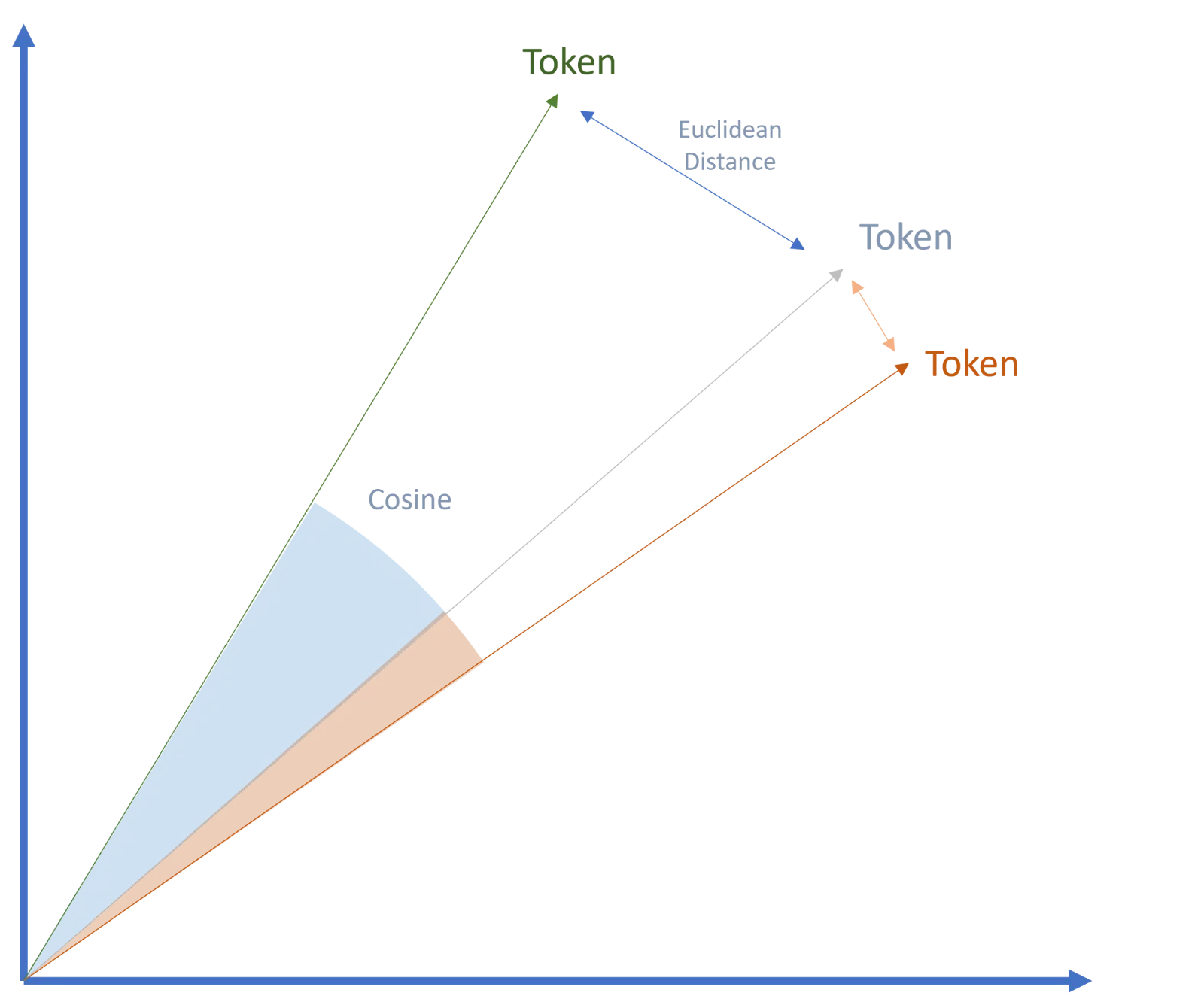

In the next step, tokens are transformed into vectors, forming the foundation of Google’s transformer technology and transformer-based language models.

This breakthrough was a game changer in AI and is a key factor in the widespread adoption of AI models today.

Vectors are numerical representations of tokens, with the numbers capturing specific attributes that describe the properties of each token.

These properties allow vectors to be placed within semantic spaces and related to other vectors. The resulting representations are known as embeddings.

The semantic similarity and relationships between vectors can then be measured using methods like cosine similarity or Euclidean distance.
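To make the two measures concrete, here is a minimal sketch in plain Python. The three-dimensional vectors are made-up toy values chosen for illustration, not real embeddings, which typically have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def euclidean_distance(a, b):
    """Straight-line distance between two equal-length vectors (0.0 = identical)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Hypothetical toy "embeddings"; the values are invented for this example.
running_shoe = [0.9, 0.8, 0.1]
jogging_shoe = [0.8, 0.9, 0.2]
tax_form = [0.1, 0.0, 0.9]

print(cosine_similarity(running_shoe, jogging_shoe))   # close to 1: similar meaning
print(cosine_similarity(running_shoe, tax_form))       # much lower: unrelated
print(euclidean_distance(running_shoe, jogging_shoe))  # small distance
print(euclidean_distance(running_shoe, tax_form))      # larger distance
```

Cosine similarity compares direction only, while Euclidean distance is also sensitive to vector length, so the two can rank neighbors differently when vectors are not normalized.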

The decoding process

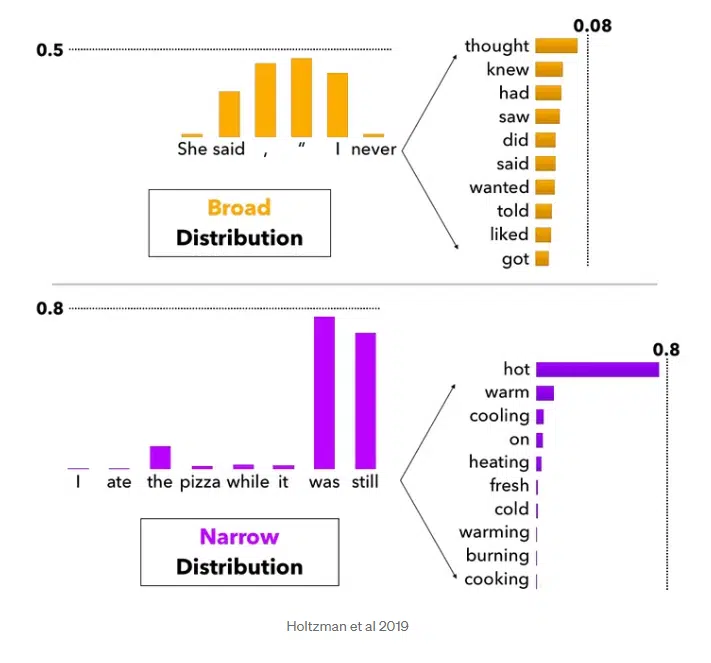

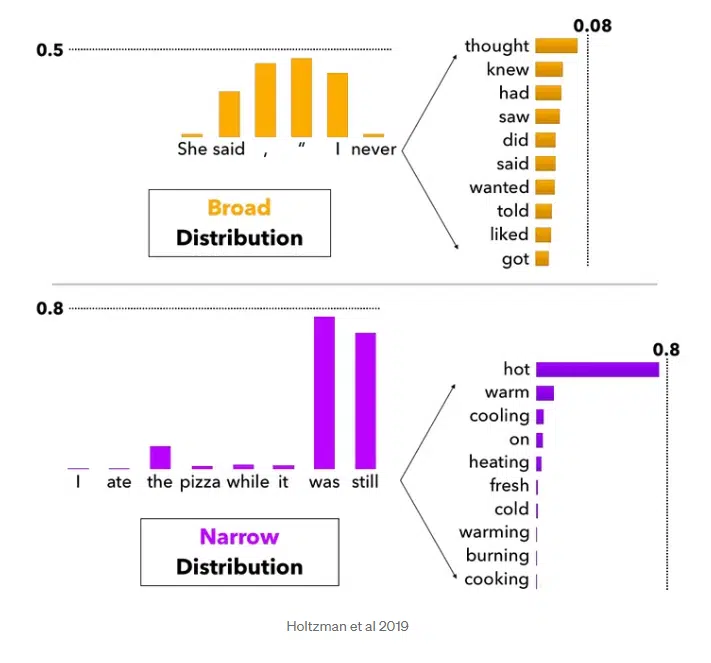

Decoding is about interpreting the probabilities that the model calculates for each possible next token (word or symbol).

The goal is to create the most sensible or natural sequence. Different methods, such as top K sampling or top P sampling, can be used when decoding.

Candidate next words are each assigned a probability score. Depending on how wide the model’s “creativity scope” is, the top K words are considered as possible next words.

Models with a broader scope consider lower-ranked candidates in addition to the single most probable (top 1) word, which makes their output more creative.

This also explains why the same prompt can produce different results. With models that are “strictly” configured, you will always get similar results.
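The following sketch illustrates top K and top P filtering on a made-up next-token distribution. The tokens and probabilities are hypothetical, and real models apply these filters to vocabularies with many thousands of entries, usually together with a temperature setting that is not shown here.

```python
import random


def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize their probabilities."""
    top = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    total = sum(p for _, p in top)
    return {token: p / total for token, p in top}


def top_p_filter(probs, p):
    """Keep the smallest set of top tokens whose cumulative probability reaches p."""
    kept, cumulative = [], 0.0
    for token, prob in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {token: prob / total for token, prob in kept}


def sample(probs, rng):
    """Draw one token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical probabilities for the word following "the best jogging ..."
next_token_probs = {"shoes": 0.5, "sneakers": 0.3, "boots": 0.15, "sandals": 0.05}

rng = random.Random(42)
strict = top_k_filter(next_token_probs, k=1)      # only the top 1 word survives
broad = top_k_filter(next_token_probs, k=3)       # three candidates remain
nucleus = top_p_filter(next_token_probs, p=0.75)  # "shoes" and "sneakers" remain

print(sample(strict, rng))  # always "shoes"
print(sample(broad, rng))   # may vary from run to run without a fixed seed
```

With `k=1` the output is deterministic, which corresponds to the “strict” case described above. Larger values of `k` or `p` leave more candidates in play and therefore allow different answers to the same prompt.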

Beyond text: The multimedia capabilities of generative AI

The encoding and decoding processes in generative AI rely on natural language processing.

By using NLP, the context window can be expanded to account for grammatical sentence structure, enabling the identification of main and secondary entities during natural language understanding.

Generative AI extends beyond text to include multimedia formats like audio and, occasionally, visuals.

However, these formats are typically transformed into text tokens during the encoding process for further processing. (This discussion focuses on text-based generative AI, which is the most relevant for GEO applications.)


Challenges and advancements in generative AI

Major challenges for generative AI include ensuring information remains up to date, avoiding hallucinations and delivering detailed insights on specific topics.

Basic LLMs are often trained on superficial information, which can lead to generic or inaccurate responses to specific queries.

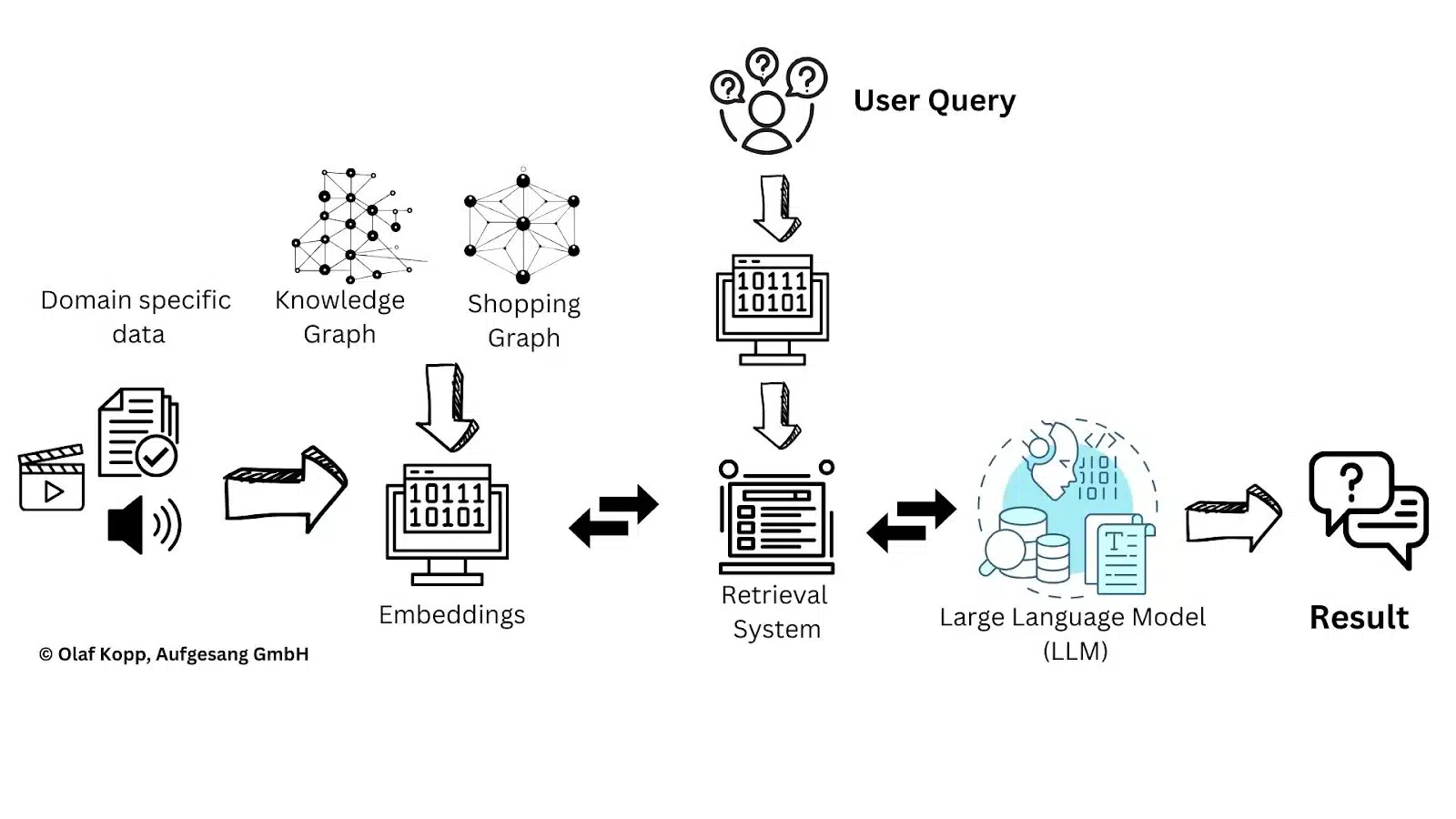

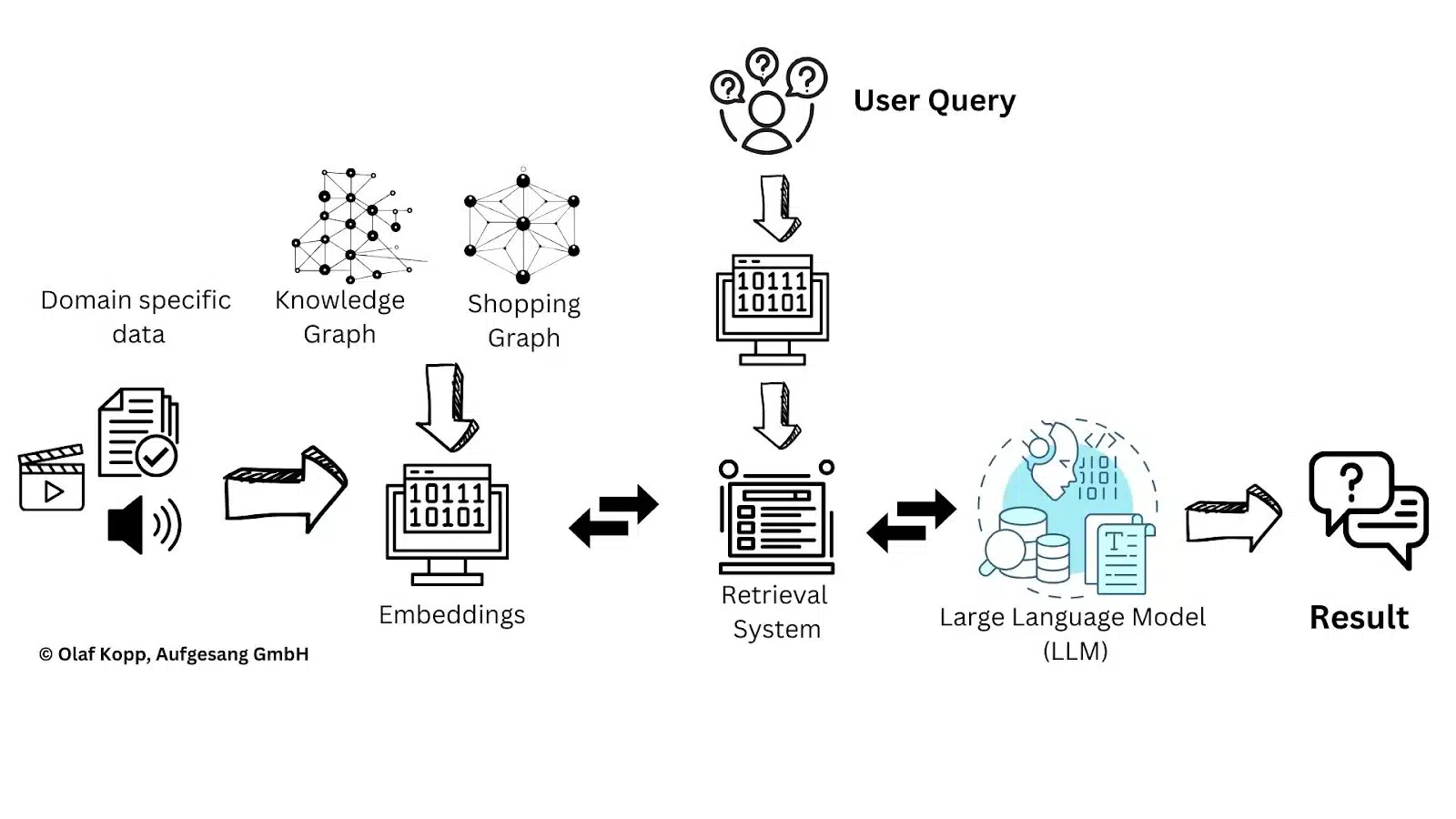

To address this, retrieval-augmented generation (RAG) has become a widely used method.

Retrieval-augmented generation: A solution to information challenges

RAG supplies LLMs with additional topic-specific data, helping them overcome these challenges more effectively.

In addition to documents, topic-specific information can also be integrated using knowledge graphs or entity nodes transformed into vectors.

This enables the inclusion of ontological information about relationships between entities, moving closer to true semantic understanding.

RAG offers potential starting points for GEO. While determining or influencing the sources in the initial training data can be challenging, GEO allows for a more targeted focus on preferred topic-specific sources.

The key question is how different platforms select these sources, which depends on whether their applications have access to a retrieval system capable of evaluating and selecting sources based on relevance and quality.

The critical role of retrieval models

Retrieval models play a crucial role in the RAG architecture by acting as information gatekeepers.

They search through large datasets to identify relevant information for text generation, functioning like specialized librarians who know exactly which “books” to retrieve on a given topic.

These models use algorithms to evaluate and select the most pertinent data, enabling the integration of external knowledge into text generation. This enhances context-rich language output and expands the capabilities of traditional language models.

Retrieval systems can be implemented through various mechanisms, including:

- Vector embeddings and vector search.

- Document index databases using techniques like BM25 and TF-IDF.
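As an illustration of the second mechanism, here is a compact BM25 scorer in plain Python. It is a sketch under stated assumptions: tokenization is a naive whitespace split, the IDF term uses the non-negative variant popularized by Lucene, the parameters `k1=1.5` and `b=0.75` are common defaults rather than anything a specific platform is known to use, and the three documents are invented.

```python
import math
from collections import Counter


def tokenize(text):
    """Naive tokenizer: lowercase and split on whitespace (an assumption)."""
    return text.lower().split()


def bm25_scores(query, documents, k1=1.5, b=0.75):
    """Return one BM25 score per document for the given query."""
    docs = [tokenize(doc) for doc in documents]
    n_docs = len(docs)
    avg_len = sum(len(doc) for doc in docs) / n_docs
    # Number of documents that contain each term at least once.
    doc_freq = Counter(term for doc in docs for term in set(doc))

    scores = []
    for doc in docs:
        term_freq = Counter(doc)
        score = 0.0
        for term in tokenize(query):
            if term not in term_freq:
                continue
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1)
            # Length normalization: long documents are penalized via b.
            norm = term_freq[term] + k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * term_freq[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


# Hypothetical mini-corpus.
documents = [
    "best running shoes for heavy runners",
    "long distance running shoes review",
    "how to file a tax return",
]
scores = bm25_scores("running shoes heavy runners", documents)
ranking = sorted(range(len(documents)), key=lambda i: scores[i], reverse=True)
print(ranking)  # document indices, best match first
```

A vector-search retriever would replace the term statistics with embedding similarity, but the output has the same shape: a ranked list of candidate sources handed to the language model.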

Retrieval approaches of major AI platforms

Not all platforms have access to such retrieval systems, which presents challenges for RAG.

This limitation may explain why Meta is now working on its own search engine, which would allow it to leverage RAG within its LLaMA models using a proprietary retrieval system.

Perplexity claims to use its own index and ranking systems, though there are accusations that it scrapes or copies search results from other engines like Google.

It remains unclear whether Claude uses RAG alongside its own index and user-provided information.

Gemini, Copilot and ChatGPT differ slightly. Microsoft and Google leverage their own search engines for RAG or domain-specific training.

ChatGPT has historically used Bing search, but with the introduction of SearchGPT, it’s uncertain whether OpenAI operates its own retrieval system.

OpenAI has stated that SearchGPT employs a mix of search engine technologies, including Microsoft Bing.

“The search model is a fine-tuned version of GPT-4o, post-trained using novel synthetic data generation techniques, including distilling outputs from OpenAI o1-preview. ChatGPT search leverages third-party search providers, as well as content provided directly by our partners, to provide the information users are looking for.”

Microsoft is one of ChatGPT’s partners.

When ChatGPT is asked about the best running shoes, there is some overlap between the top-ranking pages in Bing search results and the sources used in its answers, though the overlap is significantly less than 100%.

Evaluating the retrieval-augmented generation process

Several factors can be used to evaluate the RAG pipeline:

- Faithfulness: Measures the factual consistency of generated answers against the given context.

- Answer relevancy: Evaluates how pertinent the generated answer is to the given prompt.

- Context precision: Assesses whether relevant items in the contexts are ranked appropriately, with scores from 0 to 1.

- Aspect critique: Evaluates submissions based on predefined aspects like harmlessness and correctness, with the ability to define custom evaluation criteria.

- Groundedness: Measures how well answers align with and can be verified against source information, ensuring claims are substantiated by the context.

- Source references: Having citations and links to original sources allows verification and helps identify retrieval issues.

- Distribution and coverage: Ensuring balanced representation across different source documents and sections through controlled sampling.

- Correctness/Factual accuracy: Whether generated content contains accurate facts.

- Mean average precision (MAP): Evaluates the overall precision of retrieval across multiple queries, considering both precision and document ranking. It calculates the mean of average precision scores for each query, where precision is computed at each position in the ranked results. A higher MAP indicates better retrieval performance, with relevant documents appearing higher in search results.

- Mean reciprocal rank (MRR): Measures how quickly the first relevant document appears in search results. It’s calculated by taking the reciprocal of the rank position of the first relevant document for each query, then averaging these values across all queries. For example, if the first relevant document appears at position 4, the reciprocal rank would be 1/4. MRR is particularly useful when the position of the first correct result matters most.

- Stand-alone quality: Evaluates how context-independent and self-contained the content is, scored from 1 to 5, where 5 means the content makes complete sense on its own without requiring additional context.
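The two ranking metrics in this list, MAP and MRR, are simple enough to compute by hand. The sketch below uses two invented queries with hand-labeled relevance flags. It assumes every relevant document appears somewhere in the ranked list; if some relevant documents are never retrieved, average precision is normally divided by the total number of relevant documents instead.

```python
def reciprocal_rank(relevance):
    """1 / position of the first relevant result, or 0.0 if none is relevant."""
    for position, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            return 1 / position
    return 0.0


def average_precision(relevance):
    """Mean of precision@k taken at each position k that holds a relevant result."""
    hits = 0
    precisions = []
    for position, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
            precisions.append(hits / position)
    return sum(precisions) / len(precisions) if precisions else 0.0


# Hypothetical relevance judgments, one list per query, in ranked order.
query_results = [
    [False, False, False, True],  # first relevant document at position 4
    [True, False, True],          # relevant documents at positions 1 and 3
]

mrr = sum(reciprocal_rank(r) for r in query_results) / len(query_results)
mean_ap = sum(average_precision(r) for r in query_results) / len(query_results)

print(reciprocal_rank(query_results[0]))  # 0.25, matching the 1/4 example above
print(mrr)                                # 0.625
print(round(mean_ap, 4))                  # 0.5417
```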

Prompt vs. query

A prompt is more complex and closer to natural language than a typical search query, which is often just a series of keywords.

Prompts are typically framed as explicit questions or coherent sentences, providing greater context and enabling more precise answers.

It is important to distinguish between optimizing for AI Overviews and for AI assistant results.

- AI Overviews, a Google SERP feature, are generally triggered by search queries.

- AI assistants, by contrast, rely on more complex natural language prompts.

To bridge this gap, the RAG process must convert the prompt into a search query in the background, preserving critical context to effectively identify suitable sources.

Goals and strategies of GEO

The goals of GEO are not always clearly defined in discussions.

Some focus on having their own content cited in referenced source links, while others aim to have their name, brand or products mentioned directly in the output of generative AI.

Both goals are valid but require different strategies.

- Being cited in source links involves ensuring your content is referenced.

- Being mentioned in AI output relies on increasing the likelihood that your entity (whether a person, organization or product) is included in relevant contexts.

A foundational step for both objectives is to establish a presence among preferred or frequently selected sources, as this is a prerequisite for achieving either goal.

Do we need to focus on all LLMs?

The varying results of AI applications demonstrate that each platform uses its own processes and criteria for recommending named entities and selecting sources.

In the future, it will likely be necessary to work with multiple large language models or AI assistants and understand their unique functionalities. For SEOs accustomed to Google’s dominance, this will require an adjustment.

Over the coming years, it will be essential to monitor which applications gain traction in specific markets and industries and to understand how each selects its sources.

Why are certain people, brands or products cited by generative AI?

In the coming years, more people will rely on AI applications to search for products and services.

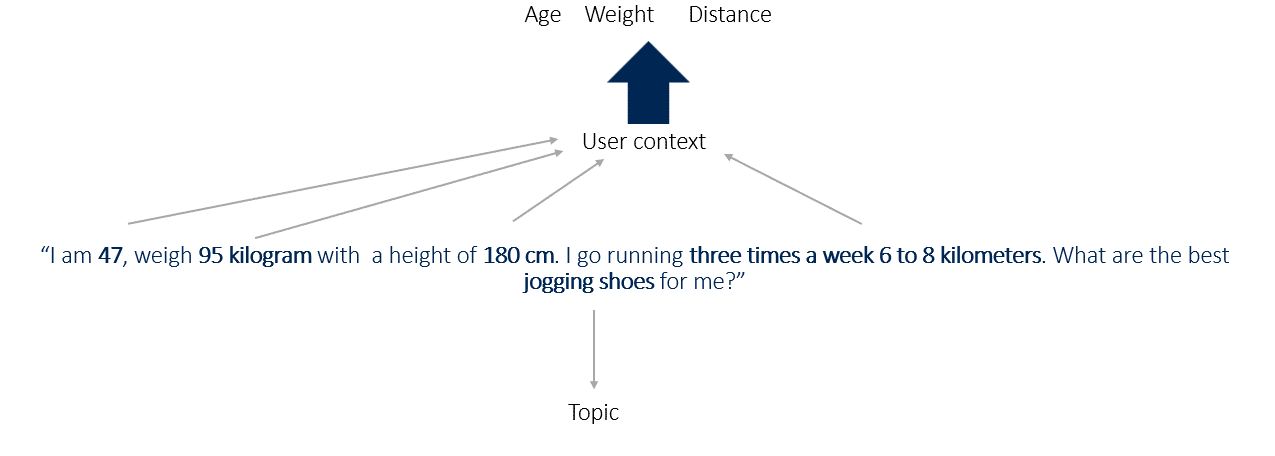

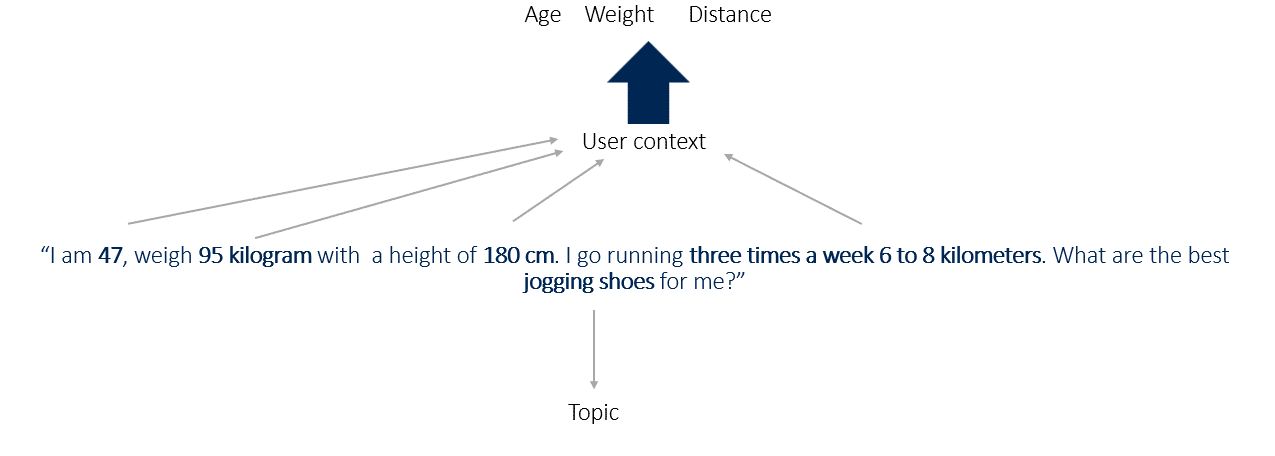

For example, a prompt like:

- “I’m 47, weigh 95 kilograms, and am 180 cm tall. I go running three times a week, 6 to 8 kilometers. What are the best jogging shoes for me?”

This prompt provides key contextual information, including age, weight, height and distance as attributes, with jogging shoes as the main entity.

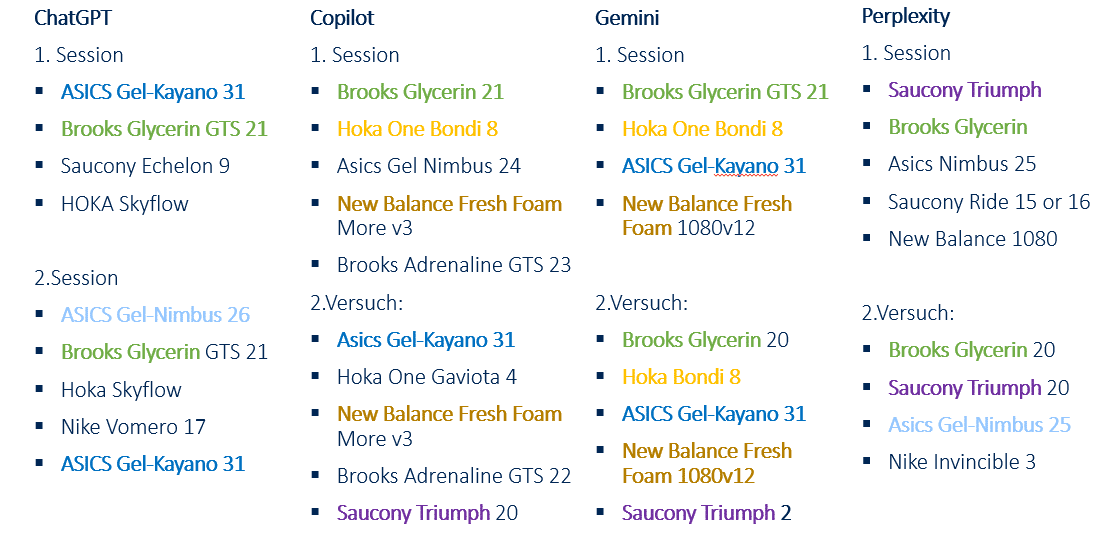

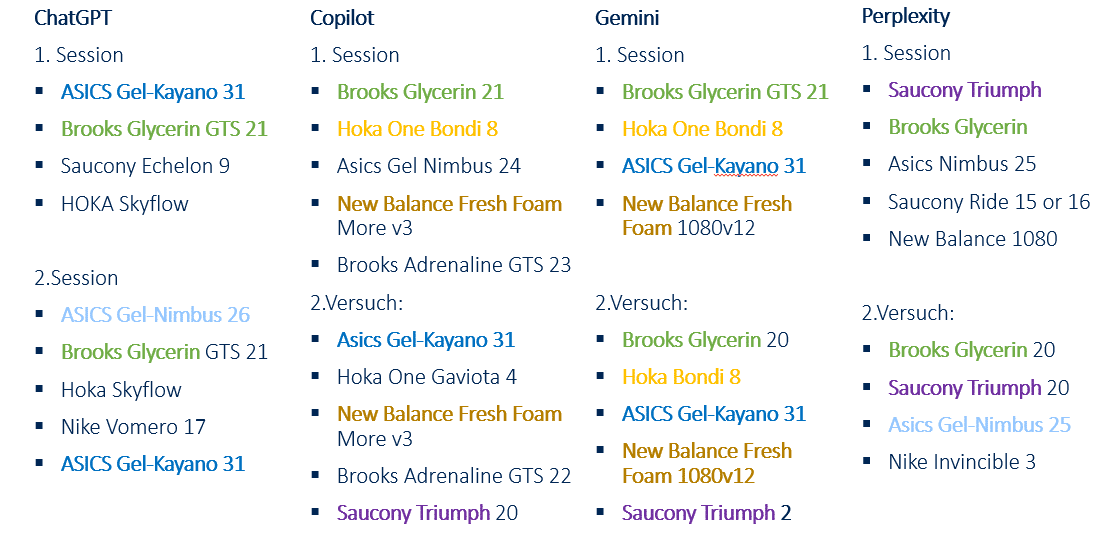

Products frequently associated with such contexts have a higher likelihood of being mentioned by generative AI.

Testing platforms like Gemini, Copilot, ChatGPT and Perplexity can reveal which contexts these systems consider.

Based on the headings of the cited sources, all four systems appear to have deduced from the attributes that I am overweight, generating information from posts with headings like:

- Best Running Shoes for Heavy Runners (August 2024)

- 7 Best Running Shoes For Heavy Men in 2024

- Best Running Shoes for Heavy Men in 2024

- Best running shoes for heavy female runners

- 7 Best Long Distance Running Shoes in 2024

Copilot

Copilot considers attributes such as age and weight.

Based on the referenced sources, it identifies an overweight context from this information.

All cited sources are informational content, such as tests, reviews and listicles, rather than ecommerce category or product detail pages.

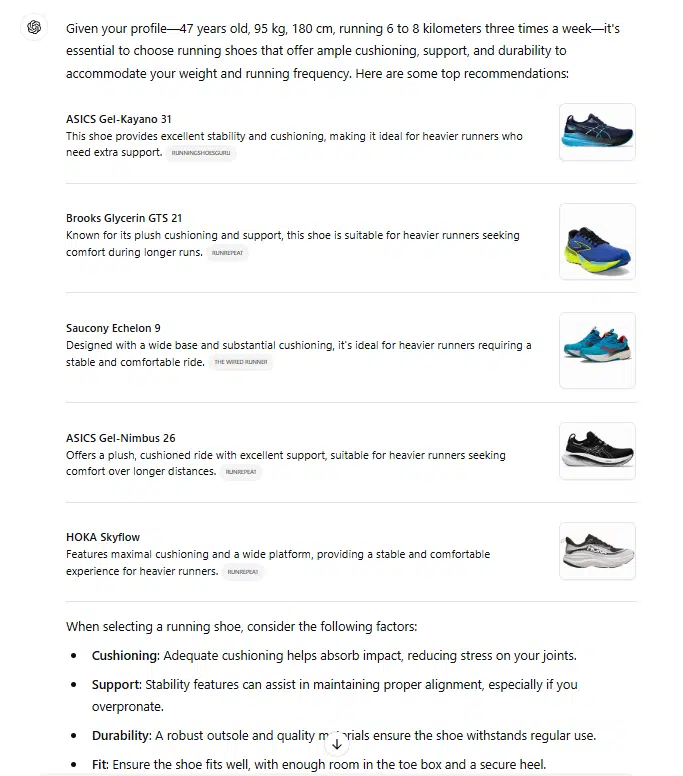

ChatGPT

ChatGPT takes attributes like distance and weight into account. From the referenced sources, it derives an overweight and long-distance context.

All cited sources are informational content, such as tests, reviews and listicles, rather than typical shop pages like category or product detail pages.

Perplexity

Perplexity considers the weight attribute and derives an overweight context from the referenced sources.

The sources include informational content, such as tests, reviews and listicles, as well as typical shop pages.

Gemini

Gemini doesn’t directly provide sources in the output. However, further investigation reveals that it also processes the contexts of age and weight.

Each major LLM lists different products, with only one shoe consistently recommended by all four tested AI systems.

All the systems exhibit a degree of creativity, suggesting varying products across different categories.

Notably, Copilot, Perplexity and ChatGPT primarily reference non-commercial sources rather than shop websites or product detail pages, which aligns with the informational purpose of the prompt.

Claude was not tested further. While it also suggests shoe models, its recommendations are based solely on initial training data without access to real-time data or its own retrieval system.

As the different results show, each LLM may have its own process for selecting sources and content, making the GEO challenge even greater.

The recommendations are influenced by co-occurrences, co-mentions and context.

The more frequently specific tokens are mentioned together, the more likely they are to be contextually related.

In simple terms, this increases the probability score during decoding.
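A rough way to explore co-occurrence in your own market is to count how often an entity appears in the same passage as a context term. The sketch below does this with naive substring matching on four invented sentences. The brand names “AcmeRun” and “ZetaStride” are fictional, and a real analysis would need a proper corpus, entity recognition and tokenization.

```python
from collections import Counter
from itertools import combinations


def co_occurrence_counts(texts, terms):
    """Count how many texts mention each pair of terms together."""
    counts = Counter()
    for text in texts:
        lowered = text.lower()
        # Naive substring check; sorted so each pair has one canonical order.
        present = sorted(term for term in terms if term in lowered)
        counts.update(combinations(present, 2))
    return counts


# Hypothetical snippets and fictional brand names, for illustration only.
texts = [
    "AcmeRun is often recommended for heavy runners.",
    "Heavy runners praise the cushioning of AcmeRun.",
    "ZetaStride makes lightweight racing flats.",
    "AcmeRun and ZetaStride both released new models.",
]
terms = ["acmerun", "zetastride", "heavy runners"]

counts = co_occurrence_counts(texts, terms)
print(counts[("acmerun", "heavy runners")])     # 2
print(counts[("heavy runners", "zetastride")])  # 0
```

In this toy corpus, “AcmeRun” is the entity tied to the “heavy runners” context. Counts like these only hint at association and say nothing about how any particular model weights it.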


Source and information selection for retrieval-augmented generation

GEO focuses on positioning products, brands and content within the training data of LLMs. Understanding the training process of LLMs is crucial for identifying potential opportunities for inclusion.

The following insights are drawn from studies, patents, scientific documents, research on E-E-A-T and personal analysis. The central questions are:

- How big the influence of the retrieval systems is in the RAG process.

- How important the initial training data is.

- What other factors can play a role.

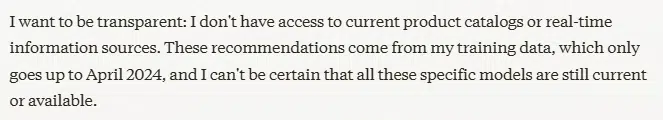

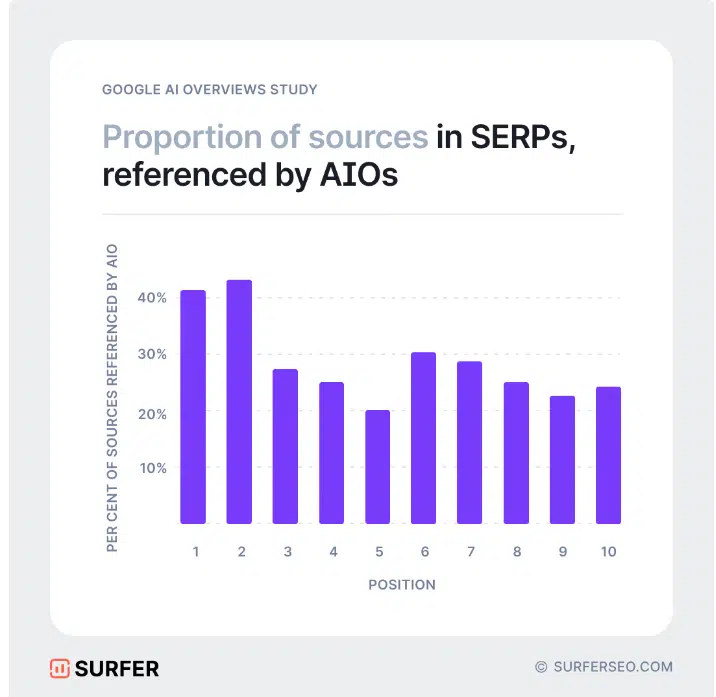

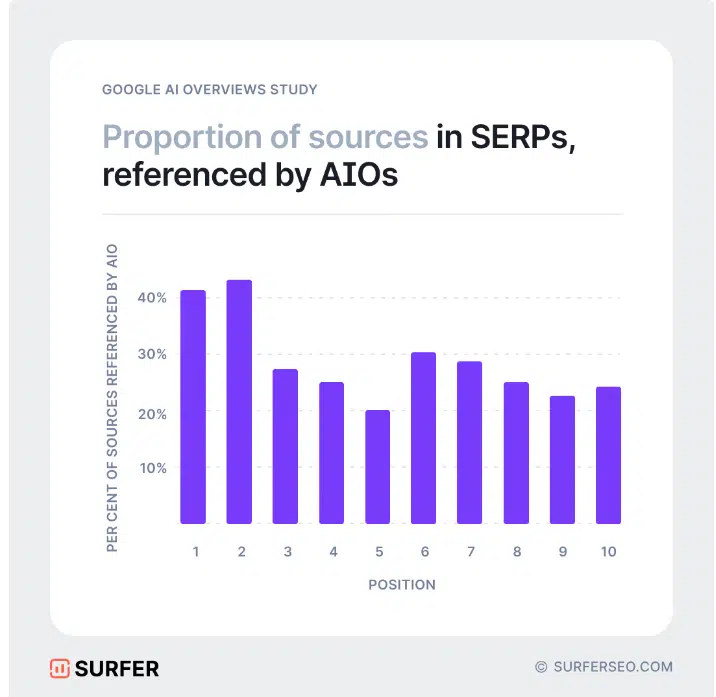

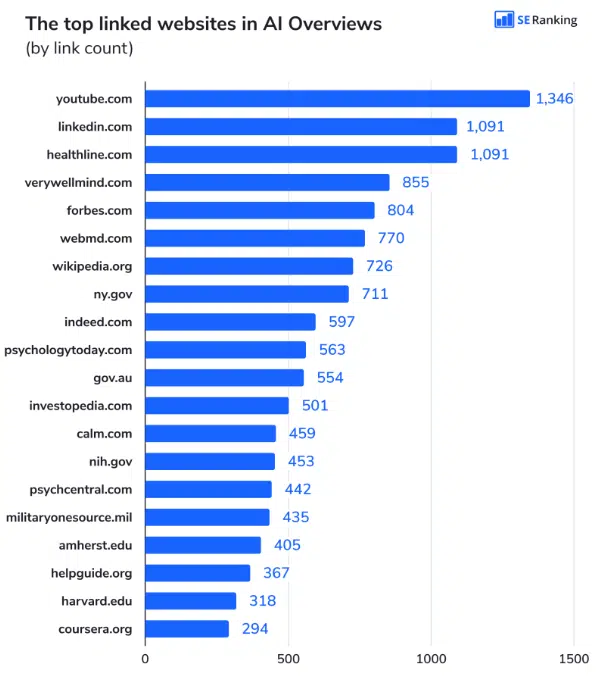

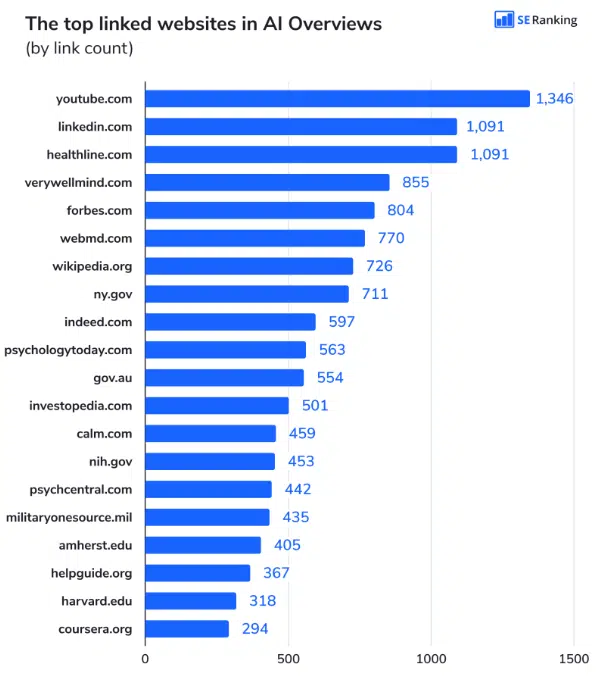

Recent studies, particularly on source selection for AI Overviews, Perplexity and Copilot, suggest overlaps in selected sources.

For example, Google AI Overviews show about 50% overlap in source selection, as evidenced by studies from Rich Sanger and Authoritas and from Surfer.

The range of fluctuation is very wide. Studies from the beginning of 2024 still found an overlap of around 15%, while some studies found a 99% overlap.

The retrieval system appears to influence approximately 50% of the AI Overviews’ results, suggesting ongoing experimentation to improve performance. This aligns with justified criticism regarding the quality of AI Overview outputs.
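If you want to track this kind of overlap for your own queries, one simple definition is the share of AI-cited URLs that also appear among the top organic results. The sketch below uses that definition with placeholder URLs. The cited studies may define and measure overlap differently, so the function is an assumption for illustration, not a reconstruction of their method.

```python
def source_overlap(ai_sources, organic_results, top_n=10):
    """Share of AI-cited URLs that also appear in the top_n organic results."""
    if not ai_sources:
        return 0.0
    top_organic = set(organic_results[:top_n])
    shared = [url for url in ai_sources if url in top_organic]
    return len(shared) / len(ai_sources)


# Placeholder URLs; in practice these would come from your own SERP and AI-answer tracking.
organic_results = [
    "https://example.com/best-running-shoes",
    "https://example.org/heavy-runner-guide",
    "https://example.net/shoe-reviews",
]
ai_sources = [
    "https://example.com/best-running-shoes",
    "https://example.net/shoe-reviews",
    "https://example.edu/biomechanics-study",
    "https://example.info/forum-thread",
]

print(source_overlap(ai_sources, organic_results))  # 0.5
```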

The selection of referenced sources in AI answers highlights where it is useful to position brands or products in a contextually appropriate way.

It’s important to differentiate between sources used during the initial training of models and those added on a topic-specific basis during the RAG process.

Examining the model training process provides clarity. For instance, Google’s Gemini, a multimodal large language model, processes diverse data types, including text, images, audio, video and code.

Its training data comprises web documents, books, code and multimedia, enabling it to perform complex tasks efficiently.

Studies on AI Overviews and their most frequently referenced sources offer insights into which sources Google uses for its indices and knowledge graph during pre-training, providing opportunities to align content for inclusion.

In the RAG process, domain-specific sources are incorporated to enhance contextual relevance.

A key feature of Gemini is its use of a Mixture of Experts (MoE) architecture.

Unlike traditional Transformers, which operate as a single large neural network, an MoE model is divided into smaller “expert” networks.

The model selectively activates the most relevant expert paths based on the input type, significantly enhancing efficiency and performance.
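The routing idea behind MoE can be sketched in a few lines. This is a generic toy version, not Gemini’s implementation: the gate is a hand-written weight matrix, the “experts” are trivial functions standing in for neural sub-networks, and all numbers are invented.

```python
import math


def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, top_k=1):
    """Route input x to the top_k experts chosen by the gate and mix their outputs."""
    # One gating score per expert: dot product of the input with that expert's gate row.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    probs = softmax(logits)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)

    output = [0.0] * len(x)
    for i in chosen:  # experts that were not chosen are never executed
        weight = probs[i] / norm
        expert_out = experts[i](x)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output, chosen


# Hypothetical stand-ins for expert networks.
experts = [
    lambda v: [2 * a for a in v],
    lambda v: [a + 1 for a in v],
    lambda v: [-a for a in v],
]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]

output, chosen = moe_forward([3.0, 0.5], gate_weights, experts, top_k=1)
print(chosen, output)  # only expert 0 runs for this input
```

The efficiency gain comes from the loop over `chosen`: only the selected experts do any work, so compute per input stays small even when the total number of experts is large.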

The RAG process is likely integrated into this architecture.

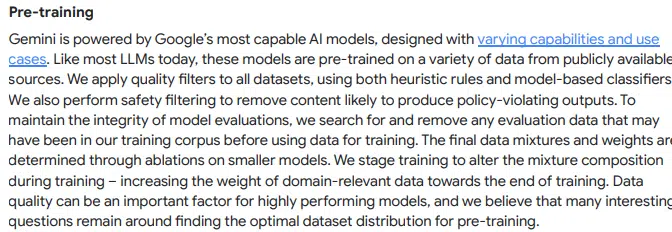

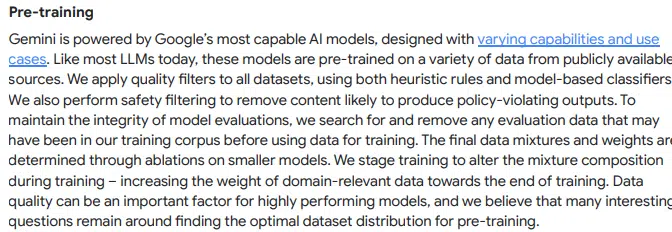

Gemini is developed by Google through several training phases, utilizing publicly available data and specialized techniques to maximize the relevance and precision of its generated content:

Pre-training

- Similar to other large language models (LLMs), Gemini is first pre-trained on various public data sources. Google applies various filters to ensure data quality and avoid problematic content.

- The training considers a flexible selection of likely words, allowing for more creative and contextually appropriate responses.

Supervised fine-tuning (SFT)

- After pre-training, the model is optimized using high-quality examples either created by experts or generated by models and then reviewed by experts.

- This process is similar to learning good text structure and content by seeing examples of well-written texts.

Reinforcement learning from human feedback (RLHF)

- The model is further developed based on human evaluations. A reward model based on user preferences helps Gemini recognize and learn preferred response styles and content.

Extensions and retrieval augmentation

- Gemini can search external data sources such as Google Search, Maps, YouTube or specific extensions to provide contextual information for the response.

- For example, when asked about current weather conditions or news, Gemini could access Google Search directly to find timely, reliable data and incorporate it into the response.

- Gemini filters search results to select the most relevant information for the answer. The model takes the context of the query into account and filters the data so that it fits the question as closely as possible.

- An example of this would be a complex technical question where the model selects results that are scientific or technical in nature rather than using general web content.

- Gemini combines the information retrieved from external sources with the model output.

- This process involves creating an optimized draft response that draws on both the model’s prior knowledge and information from the retrieved data sources.

- The model structures the answer so that the information is logically brought together and presented in a readable manner.

- Each answer undergoes additional review to ensure that it meets Google’s quality standards and does not contain problematic or inappropriate content.

- This safety check is complemented by a ranking that favors the highest-quality versions of the answer. The model then presents the highest-ranked answer to the user.

User feedback and continuous optimization

- Google continuously integrates feedback from users and experts to adapt the model and fix any weak points.

One possibility is that AI applications access existing retrieval systems and use their search results.

Studies suggest that a strong ranking in the respective search engine increases the likelihood of being cited as a source in connected AI applications.

However, as noted, the overlaps do not yet show a clear correlation between top rankings and referenced sources.

Another criterion appears to influence source selection.

Google’s approach, for example, emphasizes adherence to quality standards when selecting sources for pre-training and RAG.

The use of classifiers is also mentioned as a factor in this process.

The mention of classifiers points to E-E-A-T, where quality classifiers are also used.

Information from Google regarding post-training also references using E-E-A-T in classifying sources according to quality.

The reference to evaluators connects to the role of quality raters in assessing E-E-A-T.

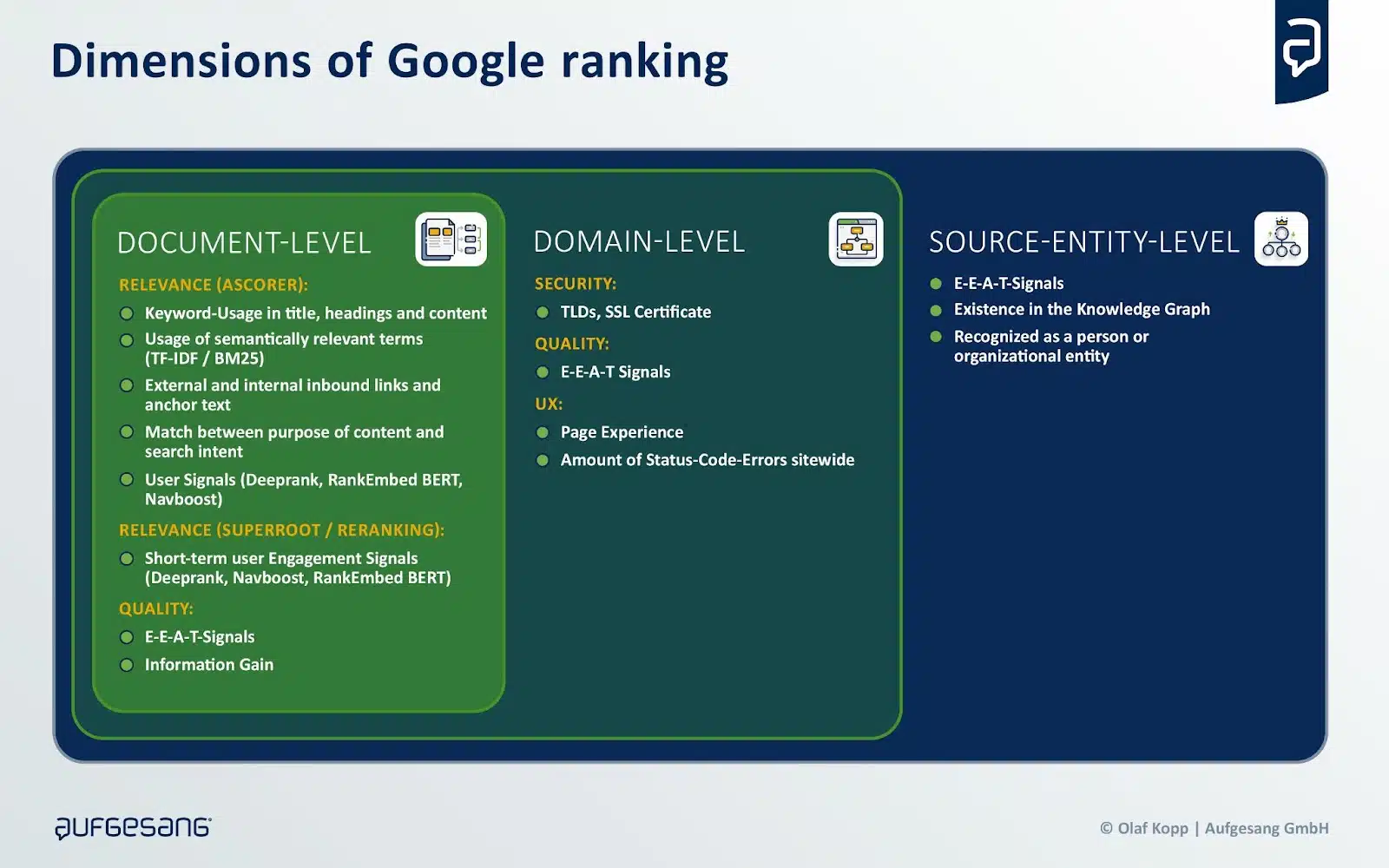

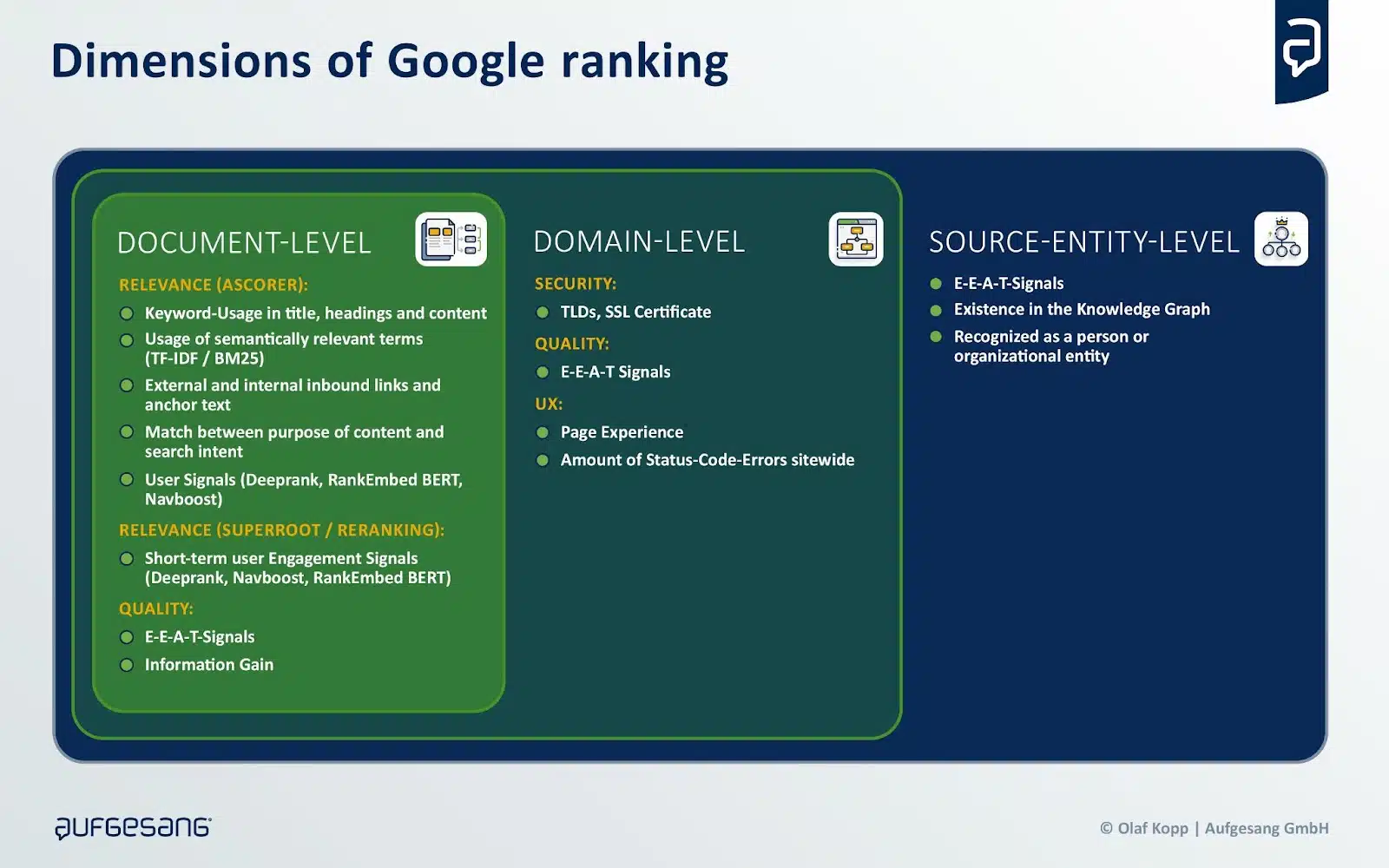

Rankings in most search engines are influenced by relevance and quality at the document, domain and author or source entity levels.

Sources may be chosen less for relevance and more for quality at the domain and source entity level.

This would also make sense, as more complex prompts need to be rewritten in the background so that appropriate search queries can be run against the rankings.

While relevance is query-dependent, quality remains consistent.

This distinction helps explain the weak correlation between rankings and sources referenced by generative AI and why lower-ranking sources are sometimes included.

To assess quality, search engines like Google and Bing rely on classifiers, including Google’s E-E-A-T framework.

Google has emphasized that E-E-A-T varies by subject area, which makes topic-specific strategies necessary, particularly for GEO.

Referenced domain sources differ by industry or topic, with platforms like Wikipedia, Reddit and Amazon playing varying roles, according to a BrightEdge study.

Thus, industry- and topic-specific factors must be integrated into positioning strategies.


Tactical and strategic approaches for LLMO / GEO

As previously noted, there are no proven success stories yet for influencing the results of generative AI.

Platform operators themselves seem uncertain about how to qualify the sources selected during the RAG process.

These points underscore the importance of identifying where optimization efforts should focus: specifically, determining which sources are sufficiently trustworthy and relevant to prioritize.

The next challenge is understanding how to establish yourself as one of those sources.

The research paper “GEO: Generative Engine Optimization” introduced the concept of GEO, exploring how generative AI outputs can be influenced and identifying the factors responsible for this.

According to the study, visibility in generative AI output can be enhanced by the following factors:

- Authority in writing: Improves performance, particularly on debate questions and queries in historical contexts, as more persuasive writing is likely to have more value in debate-like contexts.

- Citations (cite sources): Particularly beneficial for factual questions, as they provide a source of verification for the facts presented, thereby increasing the credibility of the answer.

- Statistical addition: Particularly effective in fields such as Law, Government and Opinion, where incorporating relevant statistics into webpage content can enhance visibility in specific contexts.

- Quotation addition: Most impactful in areas like People and Society, Explanations and History, likely because these topics often involve personal narratives or historical events where direct quotes add authenticity and depth.

These factors vary in effectiveness depending on the domain, suggesting that incorporating domain-specific, targeted customizations into web pages is essential for increased visibility.

The following tactical dos for GEO and LLMO can be derived from the paper:

- Use citable sources: Incorporate citable sources into your content to increase credibility and authenticity, especially for factual content.

- Insert statistics: Add relevant statistics to strengthen your arguments, especially in areas like Law and Government and for opinion questions.

- Add quotes: Use quotes to enrich content in areas such as People and Society, Explanations and History, as they add authenticity and depth.

- Domain-specific optimization: Consider the specifics of your domain when optimizing, as the effectiveness of GEO methods varies depending on the area.

- Focus on content quality: Focus on creating high-quality, relevant and informative content that provides value to users.

Tactical don’ts can also be identified:

- Avoid keyword stuffing: Traditional keyword stuffing shows little to no improvement in generative search engine responses and should be avoided.

- Don’t ignore the context: Avoid generating content that is unrelated to the topic or does not provide any added value for the user.

- Don’t overlook user intent: Don’t neglect the intent behind search queries. Make sure your content actually answers users’ questions.

BrightEdge has outlined the next strategic issues primarily based on the aforementioned analysis:

Totally different impacts of backlinks and co-citations

- AI Overviews and Perplexity favor distinct area units relying on the {industry}.

- In healthcare and schooling, each platforms prioritize trusted sources like mayoclinic.org and coursera.com, making these or related domains key targets for efficient search engine optimisation methods.

- Conversely, in sectors like ecommerce and finance, Perplexity exhibits a desire for domains similar to reddit.com, yahoo.com, and marketwatch.com.

- Tailoring search engine optimisation efforts to those preferences by leveraging backlinks and co-citations can considerably improve efficiency.

Tailor-made methods for AI-powered search

- AI-powered search approaches should be custom-made for every {industry}.

- As an illustration, Perplexity’s desire for reddit.com underscores the significance of group insights in ecommerce, whereas AI Overviews leans towards established evaluate and Q&A websites like consumerreports.org and quora.com.

- Entrepreneurs and SEOs ought to align their content material methods with these tendencies by creating detailed product opinions or fostering Q&A boards to assist ecommerce manufacturers.

Anticipate changes in the citation landscape

- SEOs should closely monitor Perplexity’s preferred domains, especially the platform’s reliance on reddit.com for community-driven content.

- Google’s partnership with Reddit could influence Perplexity’s algorithms to prioritize Reddit’s content further. This trend indicates a growing emphasis on user-generated content.

- SEOs should remain proactive and adaptable, refining strategies to align with Perplexity’s evolving citation preferences to maintain relevance and effectiveness.
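Monitoring preferred domains can start very simply: collect the URLs an AI answer cites and tally them by domain over time. The following is a minimal sketch under stated assumptions, not a method from the BrightEdge research. The function name `domain_counts` and the sample URLs are hypothetical, and the citations are assumed to have been gathered by hand or by whatever tooling you already use.

```python
from collections import Counter
from urllib.parse import urlparse


def domain_counts(cited_urls):
    """Count how often each domain appears in a list of cited URLs."""
    counts = Counter()
    for url in cited_urls:
        host = urlparse(url).netloc.lower()
        # Treat www.example.com and example.com as the same domain
        if host.startswith("www."):
            host = host[4:]
        if host:  # skip strings that are not parseable as URLs
            counts[host] += 1
    return counts


# Hypothetical citations, e.g. copied from AI answers for one query set
sample = [
    "https://www.reddit.com/r/example/comments/1",
    "https://reddit.com/r/example/comments/2",
    "https://www.marketwatch.com/story/example",
]

for domain, n in domain_counts(sample).most_common():
    print(domain, n)
```

Running the same tally on a regular schedule and comparing the results would show whether a platform’s citation preferences are shifting.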

Below are industry-specific tactical and strategic measures for GEO.

B2B tech

- Establish a presence on authoritative tech domains, particularly techtarget.com, ibm.com, microsoft.com and cloudflare.com, which are recognized as trusted sources by both platforms.

- Leverage content syndication on these established platforms to get cited as a trusted source faster.

- In the long term, build your own domain authority through high-quality content, as competition for syndication spots will increase.

- Enter into partnerships with leading tech platforms and actively contribute content there.

- Demonstrate expertise through credentials, certifications and expert opinions to signal trustworthiness.

Ecommerce

- Establish a strong presence on Amazon, as Perplexity uses the platform extensively as a source.

- Actively promote product reviews and user-generated content on Amazon and other relevant platforms.

- Distribute product information via established dealer platforms and comparison sites.

- Syndicate content and partner with trusted domains.

- Maintain detailed and up-to-date product descriptions on all sales platforms.

- Get involved on relevant specialist portals and community platforms such as Reddit.

- Pursue a balanced marketing strategy that relies on both external platforms and your own domain authority.

Continuing education

- Build trustworthy sources and collaborate with authoritative domains such as coursera.org, usnews.com and bestcolleges.com, as these are considered relevant by both systems.

- Create up-to-date, high-quality content that AI systems classify as trustworthy. The content should be clearly structured and supported by expert knowledge.

- Build an active presence on relevant platforms like Reddit as community-driven content becomes increasingly important.

- Optimize your own content for AI systems through clear structuring, clear headings and concise answers to common user questions.

- Clearly highlight quality features such as certifications and accreditations, as these increase credibility.

Finance

- Build a presence on trustworthy financial portals such as yahoo.com and marketwatch.com, as these are preferred sources for AI systems.

- Maintain current and accurate company information on leading platforms such as Yahoo Finance.

- Create high-quality, factually correct content and support it with references to recognized sources.

- Build an active presence in relevant Reddit communities as Reddit gains traction as a source for AI systems.

- Enter into partnerships with established financial media to increase your own visibility and credibility.

- Demonstrate expertise through specialist knowledge, certifications and expert opinions.

Health

- Link and reference content to trusted sources such as mayoclinic.org, nih.gov and medlineplus.gov.

- Incorporate current medical research and trends into the content.

- Provide comprehensive and well-researched medical information backed by official institutions.

- Rely on credibility and expertise through certifications and qualifications.

- Conduct regular content updates with new medical findings.

- Pursue a balanced content strategy that both builds your own domain authority and leverages established healthcare platforms.

Insurance

- Use trustworthy sources: Place content on recognized domains such as forbes.com and official government websites (.gov), as these are considered particularly credible by AI search engines.

- Provide current and accurate information: Insurance information must always be current and factually correct. This particularly applies to product and service descriptions.

- Content syndication: Publish content on authoritative platforms such as Forbes or recognized specialist portals in order to be cited as a trustworthy source more quickly.

- Emphasize local relevance: Content should be adapted to regional markets and take local insurance regulations into account.

Restaurants

- Build and maintain a strong presence on key review platforms such as Yelp, TripAdvisor, OpenTable and GrubHub.

- Actively promote and collect positive ratings and reviews from guests.

- Provide complete and up-to-date information on these platforms (menus, opening times, photos, etc.).

- Interact with food communities and specialized gastro platforms such as Eater.com.

- Perform local SEO, as AI searches place a strong emphasis on local relevance.

- Create and update comprehensive and well-maintained Wikipedia entries.

- Offer a seamless online reservation process via relevant platforms.

- Provide high-quality content about the restaurant on various channels.

Tourism / Travel

- Optimize presence on key travel platforms such as TripAdvisor, Expedia, Kayak, Hotels.com and Booking.com, as they’re seen as trusted sources by AI search engines.

- Create comprehensive content with travel guides, tips and authentic reviews.

- Optimize the booking process and make it user-friendly.

- Perform local SEO since AI searches are often location-based.

- Be active on relevant platforms and encourage reviews.

- Provide high-quality content with added value for the user.

- Collaborate with trusted domains and partners.

The future of GEO and what it means for brands

The significance of GEO for companies hinges on whether future generations will adapt their search behavior and shift from Google to other platforms.

Emerging trends in this area should become apparent in the coming years, potentially affecting the search market share.

For instance, ChatGPT Search relies heavily on Microsoft Bing’s search technology.

If ChatGPT establishes itself as a dominant generative AI application, ranking well on Microsoft Bing could become critical for companies aiming to influence AI-driven applications.

This development could offer Microsoft Bing an opportunity to gain market share indirectly.

Whether LLMO or GEO will evolve into a viable strategy for steering LLMs toward specific goals remains uncertain.

However, if it does, achieving the following objectives will be essential:

- Establishing owned media as a source for LLM training data through E-E-A-T principles.

- Generating mentions of the brand and its products in reputable media.

- Creating co-occurrences of the brand with relevant entities and attributes in authoritative media.

- Producing high-quality content that ranks well and is considered in RAG processes.

- Ensuring inclusion in established graph databases like the Knowledge Graph or Shopping Graph.

The success of LLM optimization correlates with market size. In niche markets, it’s easier to position a brand within its thematic context due to reduced competition.

Fewer co-occurrences in qualified media are required to associate the brand with relevant attributes and entities in LLMs.

Conversely, in larger markets, achieving this is more challenging because competitors often have extensive PR and marketing resources and a well-established presence.

Implementing GEO or LLMO demands significantly greater resources than traditional SEO, as it involves influencing public perception at scale.

Companies must strategically prepare for these shifts, which is where frameworks like digital authority management come into play. This concept helps organizations align structurally and operationally to succeed in an AI-driven future.

In the future, large brands are likely to hold substantial advantages in search engine rankings and generative AI outputs due to their superior PR and marketing resources.

However, traditional SEO can still play a role in training LLMs by leveraging high-ranking content.

The extent of this influence depends on how retrieval systems weigh content in the training process.

Ultimately, companies should prioritize the co-occurrence of their brands/products with relevant attributes and entities while optimizing for these relationships in qualified media.
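To make the idea of co-occurrence concrete, one rough way to audit it is to count how many sentences in a set of texts mention a brand together with a given attribute. The sketch below is illustrative only and is not how any LLM measures associations. The brand “Acme”, the attribute list, the sample texts and the function name `cooccurrence_counts` are all hypothetical, and both the sentence splitting and the substring matching are deliberately naive.

```python
import re
from collections import Counter


def cooccurrence_counts(texts, brand, attributes):
    """Count sentences that mention the brand together with each attribute."""
    counts = Counter({attr: 0 for attr in attributes})
    brand_lc = brand.lower()
    for text in texts:
        # Naive sentence split: break after ., ! or ? followed by whitespace
        for sentence in re.split(r"(?<=[.!?])\s+", text):
            s = sentence.lower()
            if brand_lc not in s:
                continue
            for attr in attributes:
                # Simple substring match; no stemming or entity linking
                if attr.lower() in s:
                    counts[attr] += 1
    return counts


# Hypothetical media snippets mentioning a made-up brand
texts = [
    "Acme makes durable hiking boots. Other brands are cheaper.",
    "Reviewers call Acme boots durable and waterproof.",
]
counts = cooccurrence_counts(texts, "Acme", ["durable", "waterproof", "cheap"])
print(dict(counts))
```

In this sample, “cheap” is never counted for the brand because the sentence containing “cheaper” does not mention Acme, which is the sentence-level association the audit is meant to surface.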

Dig deeper: 5 GEO trends shaping the future of search

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.