In 2014, the Swedish philosopher Nick Bostrom published a book about the future of artificial intelligence with the ominous title Superintelligence: Paths, Dangers, Strategies. It proved highly influential in promoting the idea that advanced AI systems (“superintelligences” more capable than humans) might one day take over the world and destroy humanity.

A decade later, OpenAI boss Sam Altman says superintelligence may only be “a few thousand days” away. A year ago, Altman’s OpenAI cofounder Ilya Sutskever set up a team within the company to focus on “safe superintelligence,” but he and his team have now raised a billion dollars to create a startup of their own to pursue this goal.

What exactly are they talking about? Broadly speaking, superintelligence is anything more intelligent than humans. But unpacking what that might mean in practice can get a bit tricky.

Different Kinds of AI

In my view, the most useful way to think about different levels and kinds of intelligence in AI was developed by US computer scientist Meredith Ringel Morris and her colleagues at Google.

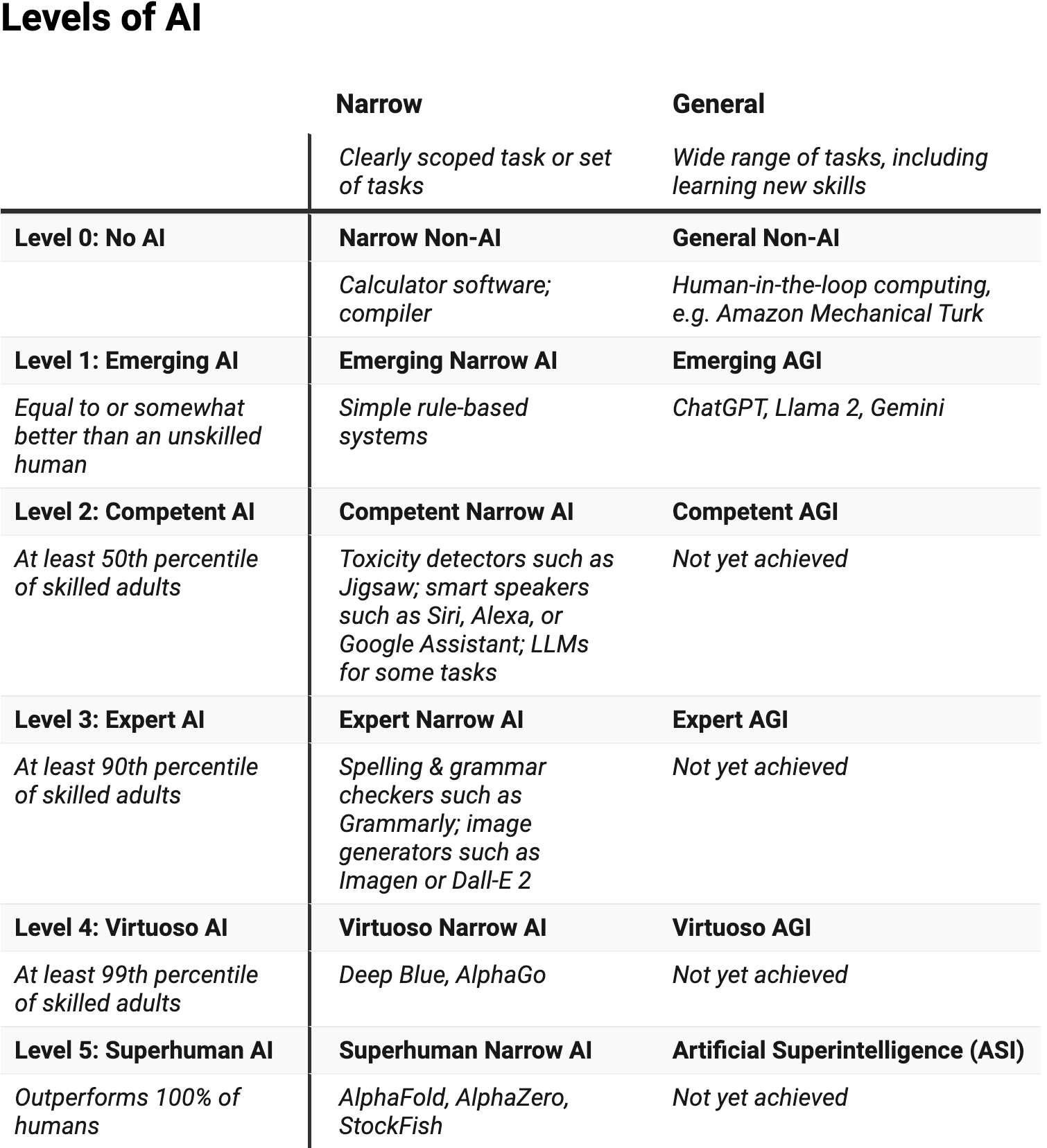

Their framework lists six levels of AI performance: no AI, emerging, competent, expert, virtuoso, and superhuman. It also makes an important distinction between narrow systems, which can carry out a small range of tasks, and more general systems.

A narrow, no-AI system is something like a calculator. It carries out various mathematical tasks according to a set of explicitly programmed rules.

There are already plenty of very successful narrow AI systems. Morris gives the Deep Blue chess program, which famously defeated world champion Garry Kasparov way back in 1997, as an example of a virtuoso-level narrow AI system.

Some narrow systems even have superhuman capabilities. One example is AlphaFold, which uses machine learning to predict the structure of protein molecules, and whose creators won the Nobel Prize in Chemistry this year.

What about general systems? This is software that can tackle a much wider range of tasks, including things like learning new skills.

A general no-AI system might be something like Amazon’s Mechanical Turk: It can do a wide range of things, but it does them by asking real people.

Overall, general AI systems are far less advanced than their narrow cousins. According to Morris, the state-of-the-art language models behind chatbots such as ChatGPT are general AI, but they are so far at the “emerging” level (meaning they are “equal to or somewhat better than an unskilled human”) and have yet to reach “competent” (as good as 50 percent of skilled adults).

So by this reckoning, we are still some distance from general superintelligence.
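
To make the two-axis framework concrete, here is a minimal Python sketch that records the placements described above. It is an illustration only, not code from Morris and her colleagues: the names `Level`, `Placement`, `EXAMPLES`, `most_capable`, and `levels_below_superhuman` are hypothetical, and the integer values simply encode the order of the six levels.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """The six performance levels, ordered from lowest to highest."""
    NO_AI = 0
    EMERGING = 1
    COMPETENT = 2
    EXPERT = 3
    VIRTUOSO = 4
    SUPERHUMAN = 5


@dataclass(frozen=True)
class Placement:
    """One system placed on both axes (hypothetical helper type)."""
    system: str
    general: bool  # False = narrow system, True = general system
    level: Level


# Placements exactly as described in the text above.
EXAMPLES = [
    Placement("calculator", general=False, level=Level.NO_AI),
    Placement("Deep Blue", general=False, level=Level.VIRTUOSO),
    Placement("AlphaFold", general=False, level=Level.SUPERHUMAN),
    Placement("Amazon Mechanical Turk", general=True, level=Level.NO_AI),
    Placement("ChatGPT", general=True, level=Level.EMERGING),
]


def most_capable(examples, general):
    """Return the highest-level placement among narrow or general systems."""
    candidates = [p for p in examples if p.general == general]
    return max(candidates, key=lambda p: p.level)


def levels_below_superhuman(placement):
    """Count how many levels separate a placement from 'superhuman'."""
    return int(Level.SUPERHUMAN - placement.level)


if __name__ == "__main__":
    for general in (False, True):
        best = most_capable(EXAMPLES, general)
        kind = "general" if general else "narrow"
        print(f"Most capable {kind} example: {best.system} ({best.level.name})")
```

Among these examples, the narrow axis already reaches the top level with AlphaFold, while the most capable general example, ChatGPT, sits four levels below it.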

How Intelligent Is AI Right Now?

As Morris points out, precisely determining where any given system sits would depend on having reliable tests or benchmarks.

Depending on our benchmarks, an image-generating system such as DALL-E might be at virtuoso level (because it can produce images 99 percent of humans could not draw or paint), or it might be emerging (because it produces errors no human would, such as mutant hands and impossible objects).

There is significant debate even about the capabilities of current systems. One notable 2023 paper argued GPT-4 showed “sparks of artificial general intelligence.”

OpenAI says its latest language model, o1, can “perform complex reasoning” and “rivals the performance of human experts” on many benchmarks.

However, a recent paper from Apple researchers found o1 and many other language models have significant trouble solving genuine mathematical reasoning problems. Their experiments show the outputs of these models seem to resemble sophisticated pattern-matching rather than true advanced reasoning. This indicates superintelligence is not as imminent as many have suggested.

Will AI Keep Getting Smarter?

Some people think the rapid pace of AI progress over the past few years will continue or even accelerate. Tech companies are investing hundreds of billions of dollars in AI hardware and capabilities, so this doesn’t seem impossible.

If this happens, we may indeed see general superintelligence within the “few thousand days” proposed by Sam Altman (that’s a decade or so in less sci-fi terms). Sutskever and his team mentioned a similar timeframe in their superalignment article.
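
As a quick check on that conversion, the short Python sketch below turns day counts into years. Altman did not say how many thousand days he had in mind, so the sample values of 2,000, 3,000, and 4,000 days are assumptions chosen purely for illustration, and `days_to_years` is a hypothetical helper name.

```python
DAYS_PER_YEAR = 365.25  # average year length, allowing for leap years


def days_to_years(days):
    """Convert a number of days into (fractional) years."""
    return days / DAYS_PER_YEAR


if __name__ == "__main__":
    # Assumed readings of "a few thousand days"; not figures from Altman.
    for days in (2000, 3000, 4000):
        print(f"{days} days is about {days_to_years(days):.1f} years")
```

Under these assumed readings, the phrase spans roughly five and a half to eleven years, which is consistent with "a decade or so" at the upper end.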

Many recent successes in AI have come from the application of a technique called “deep learning,” which, in simplistic terms, finds associative patterns in gigantic collections of data. Indeed, this year’s Nobel Prize in Physics was awarded to John Hopfield and Geoffrey Hinton, the so-called “Godfather of AI,” for their invention of the Hopfield network and Boltzmann machine, which are the foundation of many powerful deep learning models used today.

General systems such as ChatGPT have relied on data generated by humans, much of it in the form of text from books and websites. Improvements in their capabilities have largely come from increasing the scale of the systems and the amount of data on which they are trained.

However, there may not be enough human-generated data to take this process much further (although efforts to use data more efficiently, generate synthetic data, and improve transfer of skills between different domains may bring improvements). Even if there were enough data, some researchers say language models such as ChatGPT are fundamentally incapable of reaching what Morris would call general competence.

One recent paper has suggested an essential feature of superintelligence would be open-endedness, at least from a human perspective. It would need to be able to continuously generate outputs that a human observer would regard as novel and be able to learn from.

Existing foundation models are not trained in an open-ended way, and existing open-ended systems are quite narrow. This paper also highlights how either novelty or learnability alone is not enough. A new type of open-ended foundation model is needed to achieve superintelligence.

What Are the Risks?

So what does all this mean for the risks of AI? In the short term, at least, we don’t need to worry about superintelligent AI taking over the world.

But that’s not to say AI doesn’t present risks. Again, Morris and co have thought this through: As AI systems gain greater capability, they may also gain greater autonomy. Different levels of capability and autonomy present different risks.

For example, when AI systems have little autonomy and people use them as a kind of consultant (when we ask ChatGPT to summarize documents, say, or let the YouTube algorithm shape our viewing habits), we might face a risk of over-trusting or over-relying on them.

In the meantime, Morris points out other risks to watch out for as AI systems become more capable, ranging from people forming parasocial relationships with AI systems to mass job displacement and society-wide ennui.

What’s Next?

Let’s suppose we do one day have superintelligent, fully autonomous AI agents. Will we then face the risk they could concentrate power or act against human interests?

Not necessarily. Autonomy and control can go hand in hand. A system can be highly automated, yet provide a high level of human control.

Like many in the AI research community, I believe safe superintelligence is feasible. However, building it will be a complex and multidisciplinary task, and researchers will have to tread unbeaten paths to get there.

This article is republished from The Conversation under a Creative Commons license. Read the original article.