Tucked away in the Settings section of Google Search Console is a report few SEO professionals talk about, but one I like to monitor.

These reports are called Crawl Stats.

Here, you’ll find an interesting set of metrics on Googlebot crawl activity. These metrics are especially useful for websites with thousands or millions of pages.

Googlebot ‘Crawl stats’

Long ago, Google Search Console had easy-to-find metrics on Googlebot crawl activity. Then, they seemingly disappeared.

In reality, they were tucked away in the Settings section.

How to access the crawl stats reports:

- Click Settings at the bottom of the left navigation.

- Go to the Crawl stats section.

- Click Open report.

About the crawl stats data

As Googlebot crawls your site, Google tracks various aspects of Googlebot’s activity and reports on them in Crawl Stats.

This is where you’ll find high-level statistics about Google’s crawling history on your website.

Google says this data is for advanced users

The Googlebot Crawl Stats data is not for technical SEO rookies.

Google specifically says this data is aimed at “advanced users” with thousands of pages on their site, which may be why it’s located in such an unusual place, unseen by many in the SEO community.

One reason Google may perceive this as an advanced report is that so many things can influence these metrics, including network issues and content delivery services such as Akamai.

Who will find crawl stats most useful?

I find the Crawl Stats reports less an “advanced” set of reports and more something that’s useful to enterprise SEOs without crawler monitoring tools such as Lumar and Botify.

When doing SEO on an enterprise website with thousands or millions of pages, crawler optimization is a critical task, and crawler activity metrics provide important insight for defining action items.

Smaller sites likely don’t need to worry too much about crawler activity because there’s probably enough crawl budget allocated to the site for it to be crawled at an appropriate pace.

On the other hand, enterprise sites tend to have far more pages that need to be crawled, discovered, and/or refreshed than Google crawls on their site each day.

For this reason, they must optimize for crawler activity, and this report is a tool to help guide next steps.

What to look for in this data

After years of reviewing this data across many sites, I have one primary rule:

- Don’t spend a lot of time here unless you see fluctuations and correlations.

Often these reports are interesting but not actionable.

Example fluctuations that I tend to investigate:

- HTML requests decreased (or spiked) at the same time bytes of JavaScript downloaded increased (or spiked).

- Average response time increased (or spiked) at the same time the number of HTML requests went down (or suddenly fell).

- Total download size increased (or spiked), but the number of HTML requests didn’t change.

- The percent of requests for discovery (to discover new URLs) increases and the percent of requests for refresh goes down; however, you didn’t launch new URLs on the site.
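If you log these numbers by hand each month, the first three patterns above can be roughly sketched as a comparison between two logged periods. This is only an illustration: the `Snapshot` fields, the function names, and the 20% threshold are assumptions made for the example, not anything Google Search Console provides or recommends.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """One manually logged period of Crawl Stats numbers (hypothetical fields)."""
    html_requests: int
    js_bytes: int
    avg_response_ms: float
    total_download_bytes: int


def pct_change(old, new):
    """Percent change from old to new; returns 0.0 when old is zero."""
    if old == 0:
        return 0.0
    return (new - old) / old * 100.0


def flag_fluctuations(prev, curr, threshold=20.0):
    """Return descriptions of correlated swings worth investigating.

    threshold is an arbitrary percent cutoff chosen for this sketch.
    """
    html = pct_change(prev.html_requests, curr.html_requests)
    js = pct_change(prev.js_bytes, curr.js_bytes)
    resp = pct_change(prev.avg_response_ms, curr.avg_response_ms)
    size = pct_change(prev.total_download_bytes, curr.total_download_bytes)

    flags = []
    # HTML requests dropped or spiked while JavaScript bytes rose.
    if abs(html) >= threshold and js >= threshold:
        flags.append("HTML requests moved while JavaScript bytes rose")
    # Response time rose while HTML requests fell.
    if resp >= threshold and html <= -threshold:
        flags.append("response time rose while HTML requests fell")
    # Download size rose while HTML requests stayed roughly flat.
    if size >= threshold and abs(html) < threshold:
        flags.append("download size rose but HTML requests were flat")
    return flags
```

An empty list means there is probably nothing to chase this month; any flag is a prompt to dig in, not a diagnosis.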

When to look at crawl stats

Crawl stats are good to peruse (and log) at least once a month.

They’re especially good to monitor after major releases, such as a platform migration or major redesign. This will help you quickly understand how Google is responding to your newly launched changes.

Remember: If you have a bot monitoring tool such as Lumar or Botify, then this data isn’t as useful as what you’ll find in the bot monitoring data provided by those tools.

Caveats about the crawl stats data

Many things can influence Google’s crawl stats metrics beyond a normal dev release.

This means the SEO team, product manager(s) and developer(s) must think outside the box when evaluating the fluctuations.

You must consider what could have caused an increase or decrease in Googlebot crawl activity, not only in your release but also across the network and tech stack.

Changes to something such as Akamai could potentially impact this data.

Log the crawl stats data in a spreadsheet

This is data I like to archive because Google reports such a small window of time.

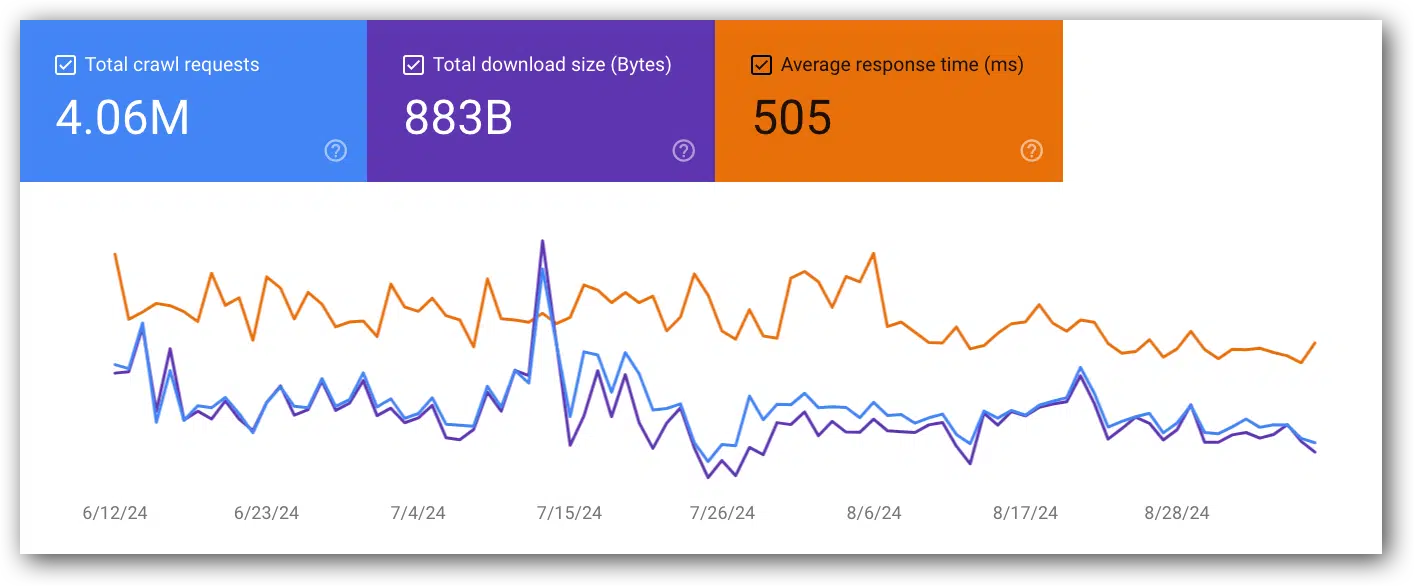

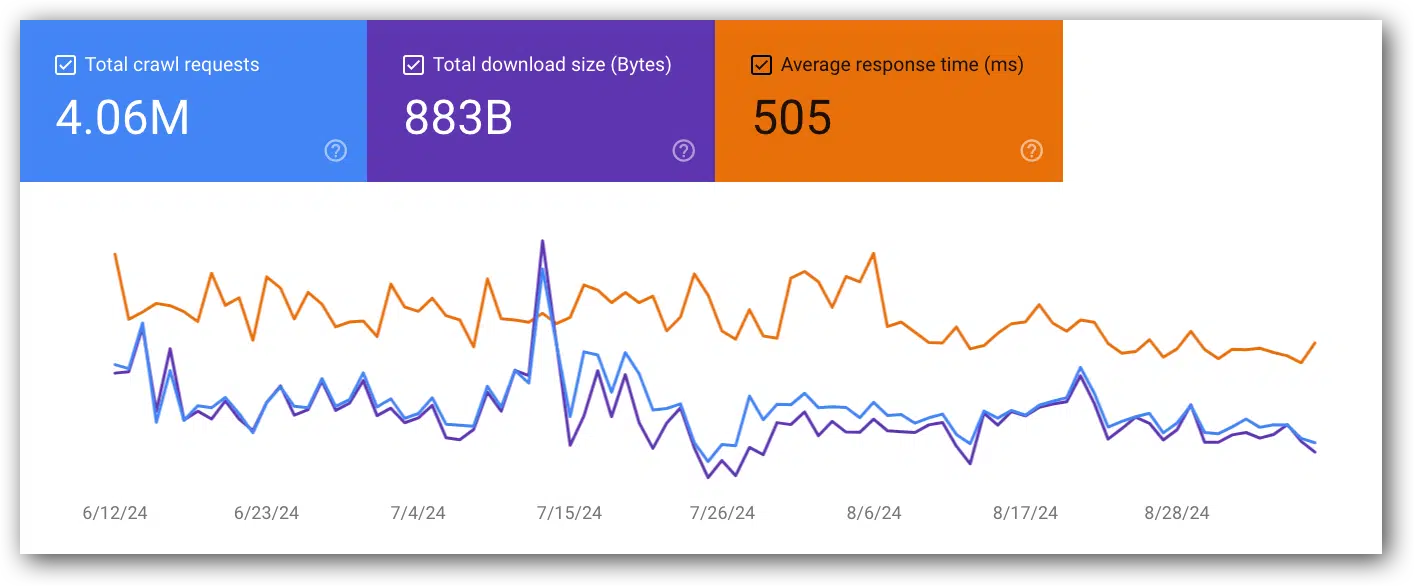

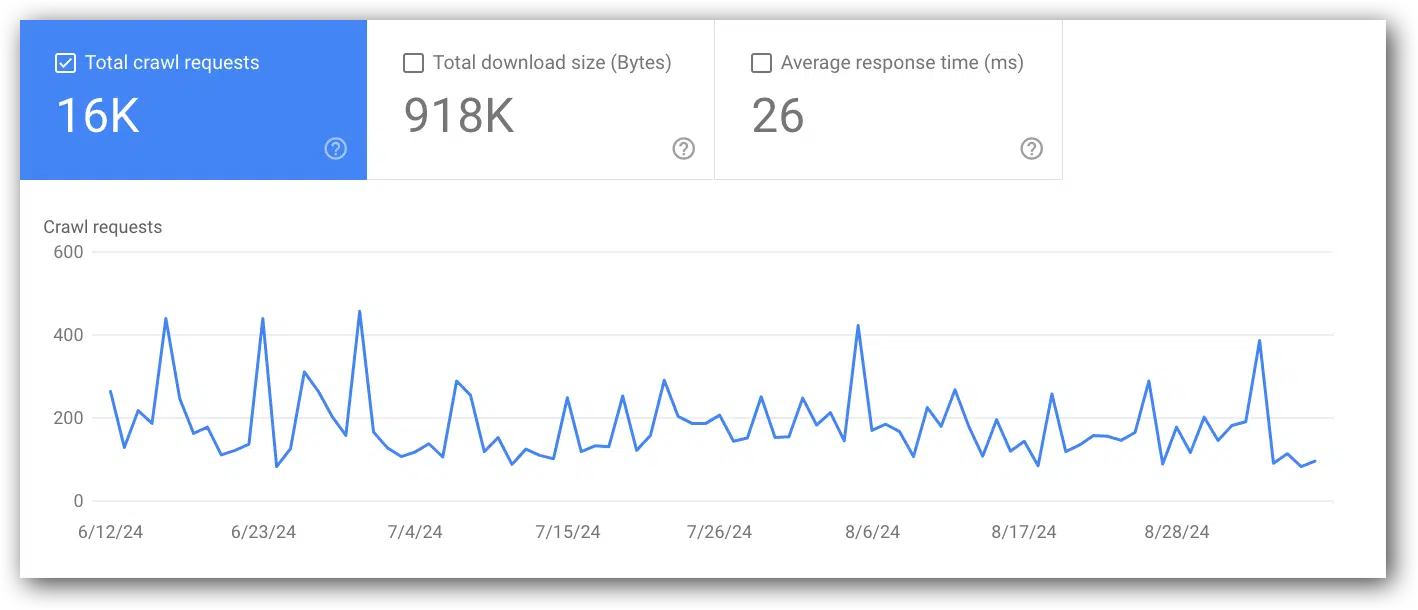

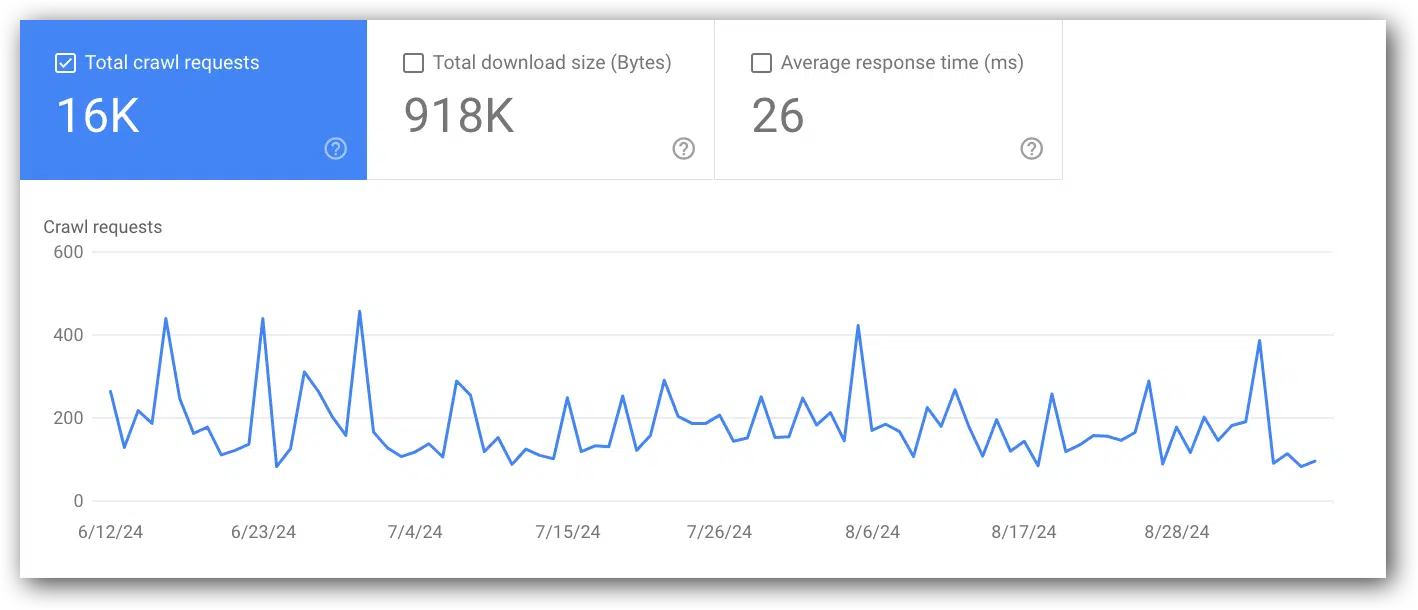

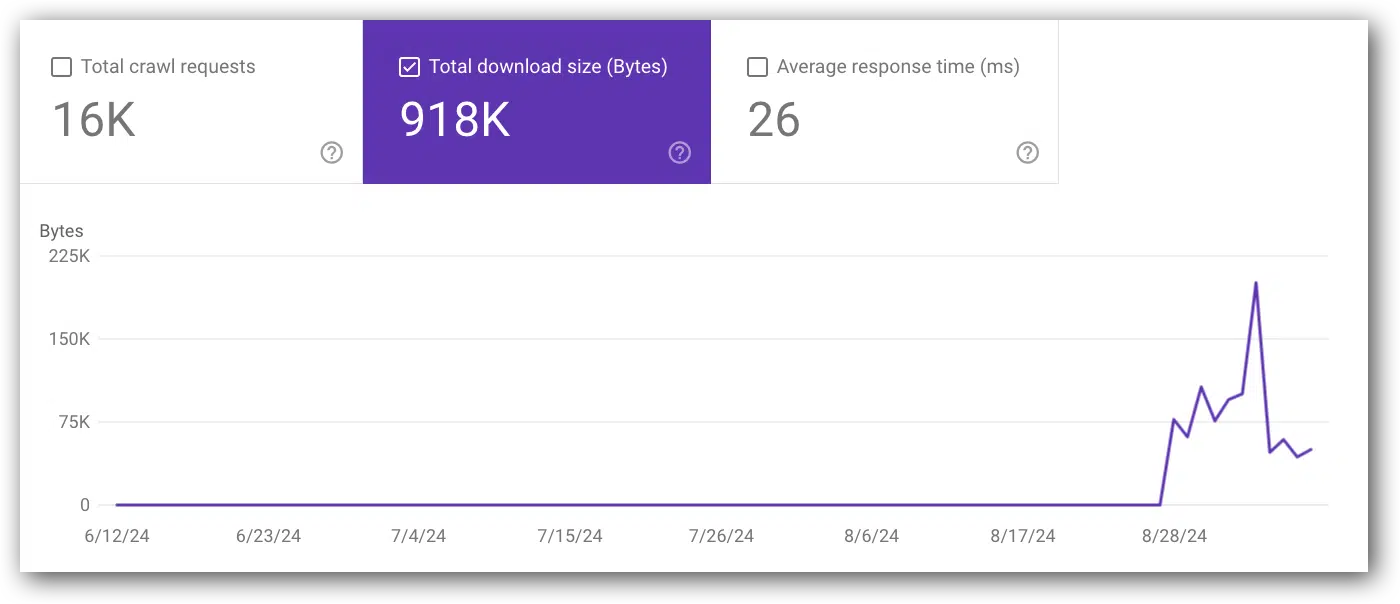

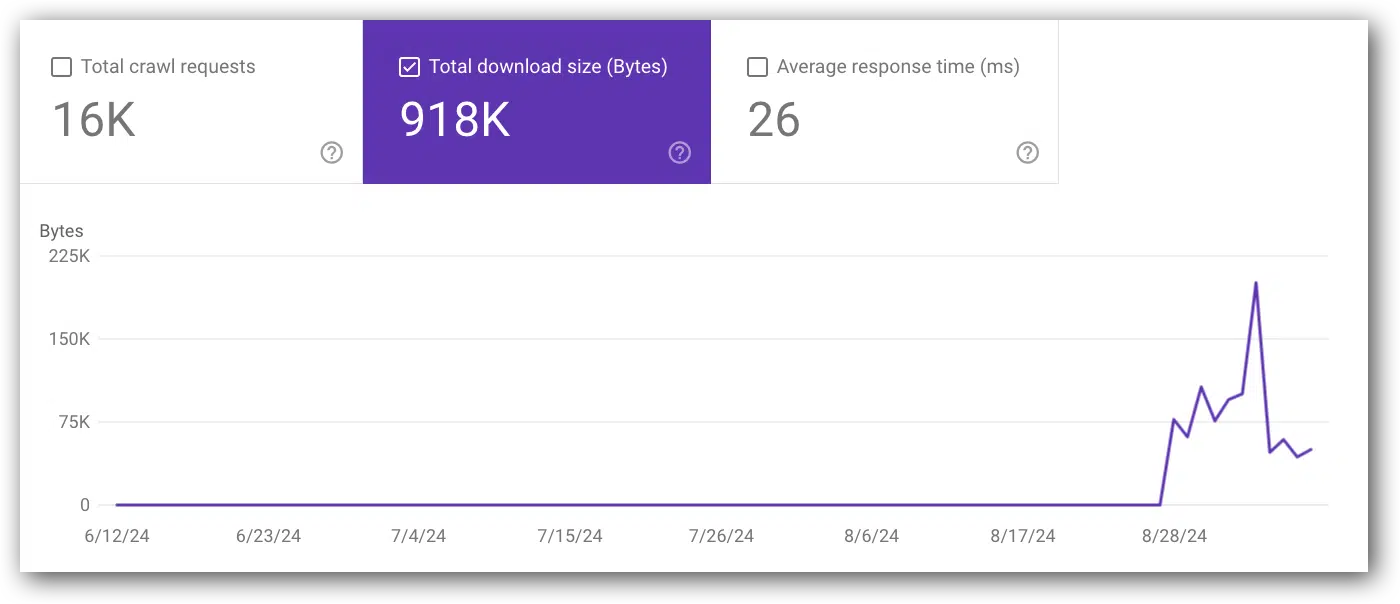

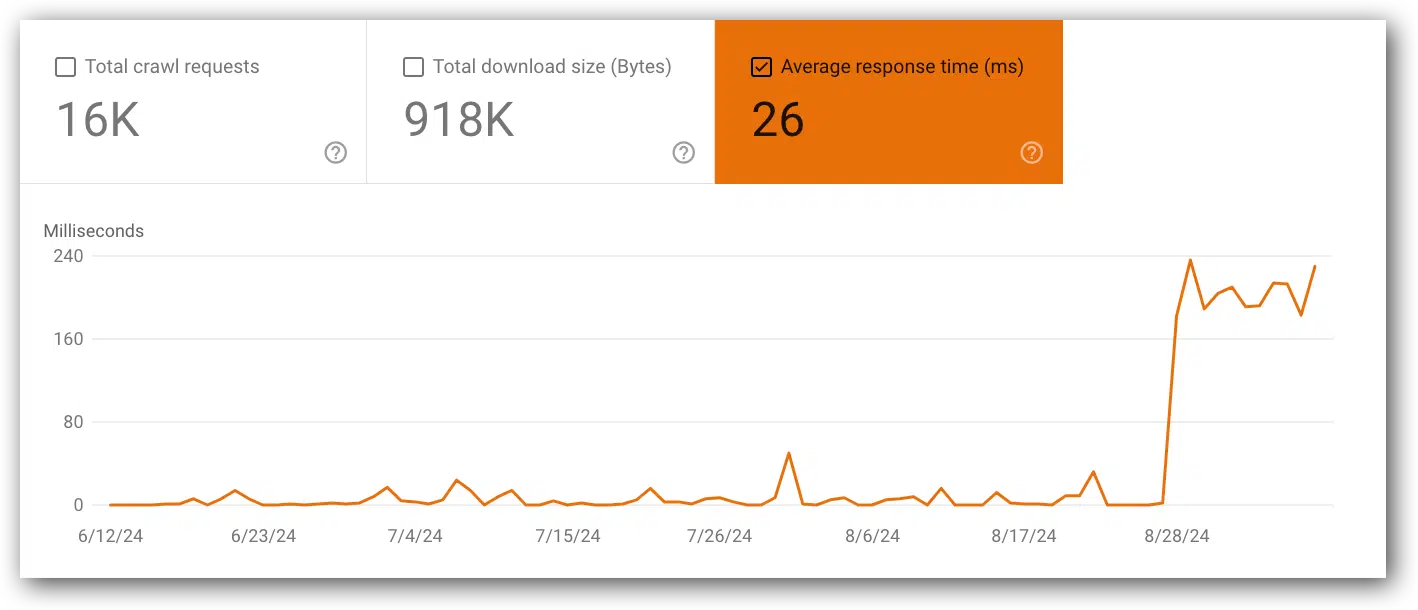

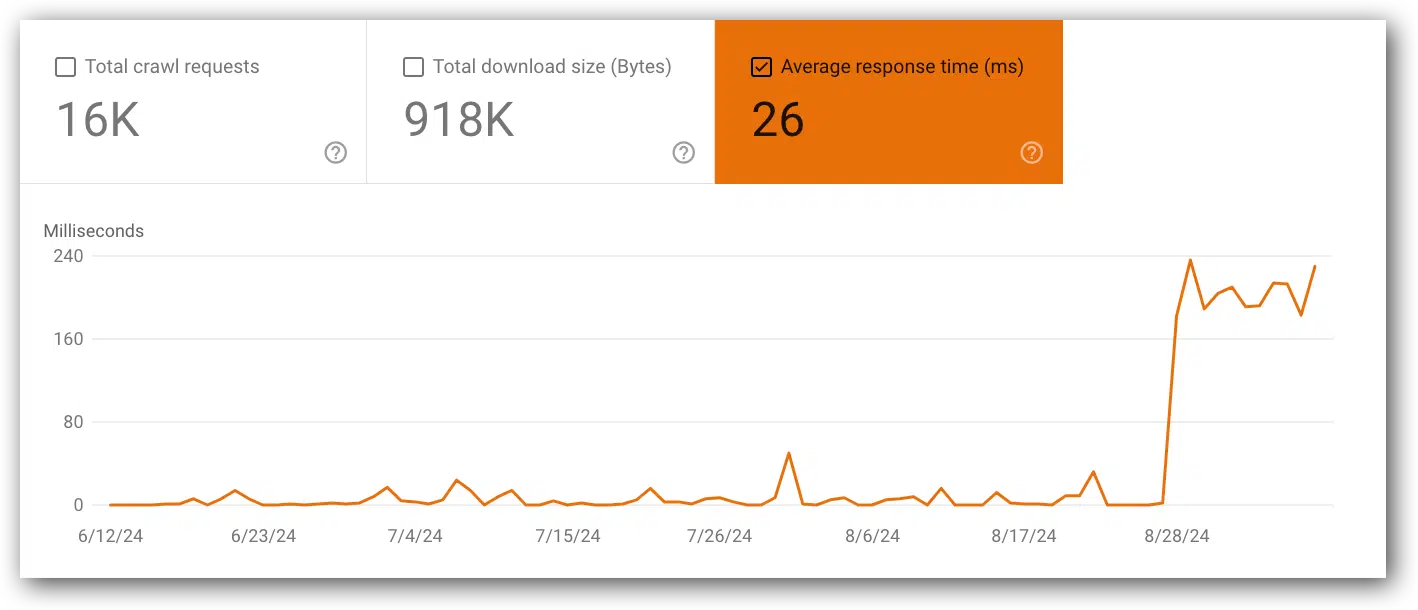

A great example of this is a challenge I’m facing now with a client. What’s reported in GSC right now looks like things are improving:

However, because I have metrics from six months ago, I can say that these metrics are 40% higher than they were six months ago.

While they’re trending down, they’re still worse than they were in the past. The client’s challenge is that development has no idea why this is happening (unfortunately, solving that problem is beyond the scope of this article).

You may think to just grab a screenshot. However, that makes it very hard to compare over time.

Notice there is no left axis in the chart. You really can’t tell what the lines reflect. (Note: Numbers do appear on the left/right axis when you are only viewing two metrics in the chart.)

Instead, drop this data into a spreadsheet. Then, you have actual data that can be charted over time, calculated and used to compare with other metrics, such as visits.

Having the historical data in one place is often useful when discussing major changes with development to show how much better the metrics were 4-6+ months ago.

Remember, development likes hard, specific data, so charts with actual numbers on the left/right axis will be more useful to you than charts with varying numbers on the axis (or worse, no numbers on the axis at all).
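If you prefer a script to a manual spreadsheet, the same archive can be kept as a CSV file. The sketch below is a minimal illustration, assuming you copy the numbers out of the report by hand each month; the column names in `FIELDS`, the function names, and the file layout are hypothetical choices for this example, and nothing here pulls data from Google Search Console automatically.

```python
import csv
import os

# Hypothetical column names; log whichever Crawl Stats numbers you track.
FIELDS = ["month", "total_crawl_requests", "total_download_bytes", "avg_response_ms"]


def append_snapshot(path, snapshot):
    """Append one month's numbers to the CSV log, adding a header to a new file."""
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow(snapshot)


def load_snapshots(path):
    """Read the log back as a list of dicts, in the order rows were appended."""
    with open(path, newline="") as f:
        return list(csv.DictReader(f))


def percent_change(rows, metric, periods_back):
    """Percent change of the latest row versus the row periods_back entries earlier."""
    if len(rows) <= periods_back:
        raise ValueError("not enough history logged yet")
    old = float(rows[-1 - periods_back][metric])
    new = float(rows[-1][metric])
    if old == 0:
        raise ValueError("cannot compute percent change from zero")
    return (new - old) / old * 100.0
```

With one row logged per month, `percent_change(rows, "avg_response_ms", 6)` compares the latest month with six months earlier, which is the kind of comparison the reporting window in the Crawl Stats chart can’t show on its own.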

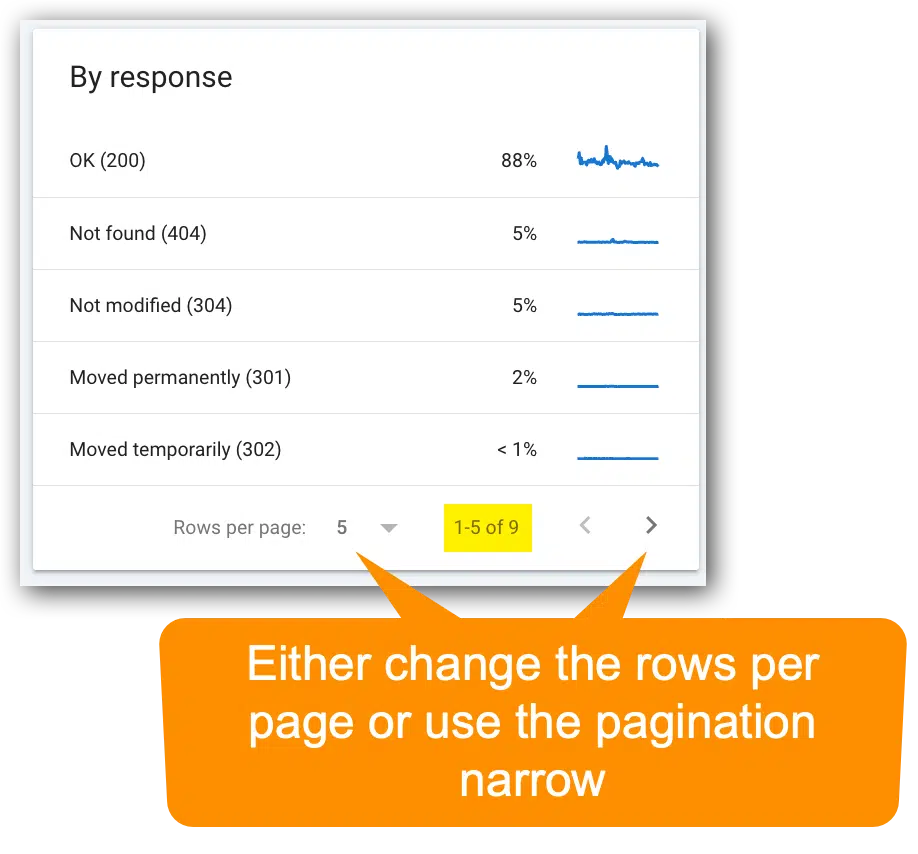

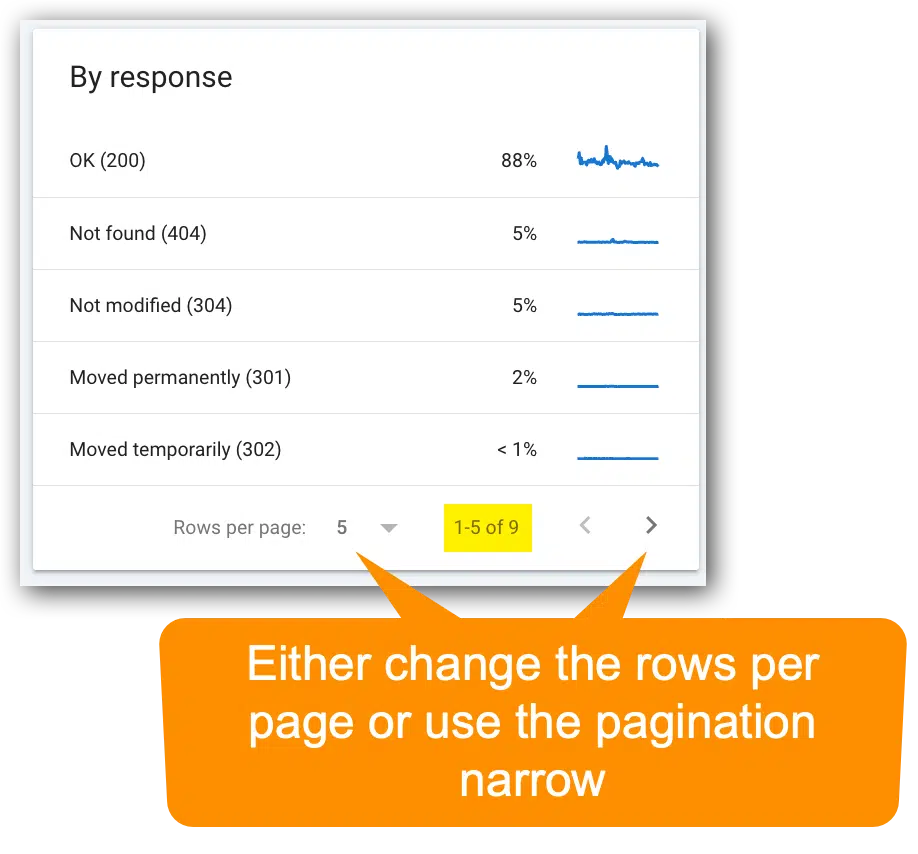

Remember, the report boxes are paginated

Though the most important metrics you’ll need are likely visible in the default view, many of the report sections are paginated, and they’re easy to miss!

Which metrics to monitor and why

Let’s get into the primary metrics to look at (very quickly) each month, along with a few tips for pulling action items out of the data:

Total crawl requests

- View this report in Google Search Console (located in the top chart).

- Google definition: “The total number of crawl requests issued for URLs on your site, whether successful or not.”

- If this metric goes up or down, compare it with average response time and total download size (bytes).

- An obvious reason for this metric to go up is a change to a lot of code or the launch of a lot of new pages. However, that’s by no means the only cause.

Total download size (bytes)

- View this report in Google Search Console (located in the top chart).

- Google definition: “Total number of bytes downloaded from your site during crawling, for the specified time period.”

- If this metric goes up or down, compare it with average response time.

- An obvious cause for this metric to increase is adding a lot of code across thousands of pages or launching a lot of new pages. However, that’s by no means the only cause.

Average response time (ms)

- View this report in Google Search Console (located in the top chart).

- Google definition: “Average response time for all resources fetched from your site during the specified time period.”

- If this metric goes up or down, compare it with total crawl requests and total download size (bytes).

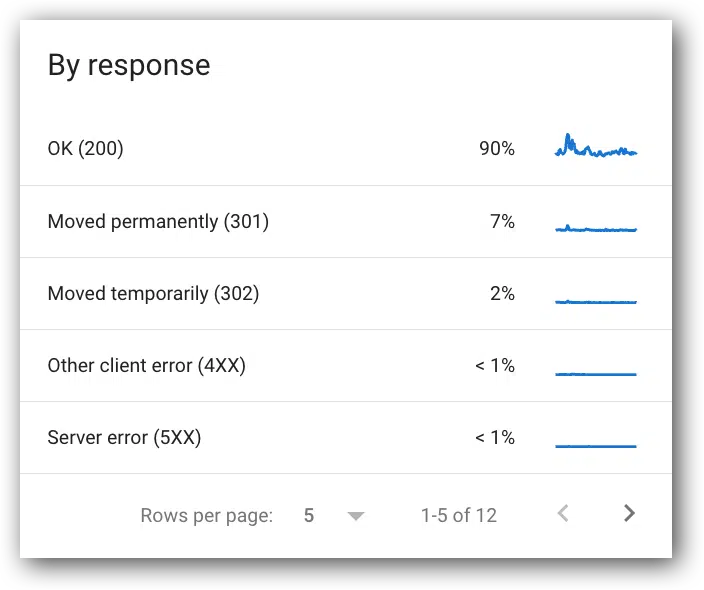

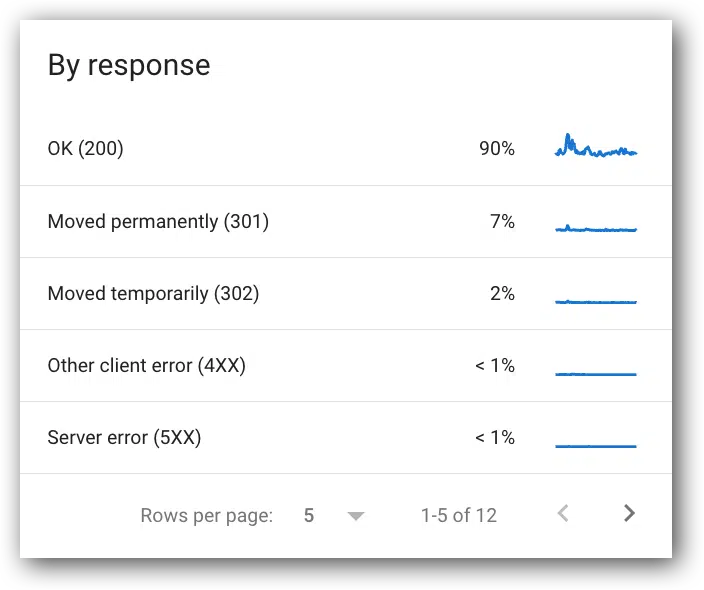

Crawl requests breakdown by response

- View this report in Google Search Console (located below the top chart).

- Google definition: “This table shows the responses that Google received when crawling your site, grouped by response type, as a percentage of all crawl responses…”

- Common responses:

  - OK (200).

  - Moved permanently (301).

  - Server error (5xx).

  - Other client error (4xx).

  - Not found (404).

  - Not modified (304).

  - Page timeout.

  - Robots.txt not available.

  - Redirect error.

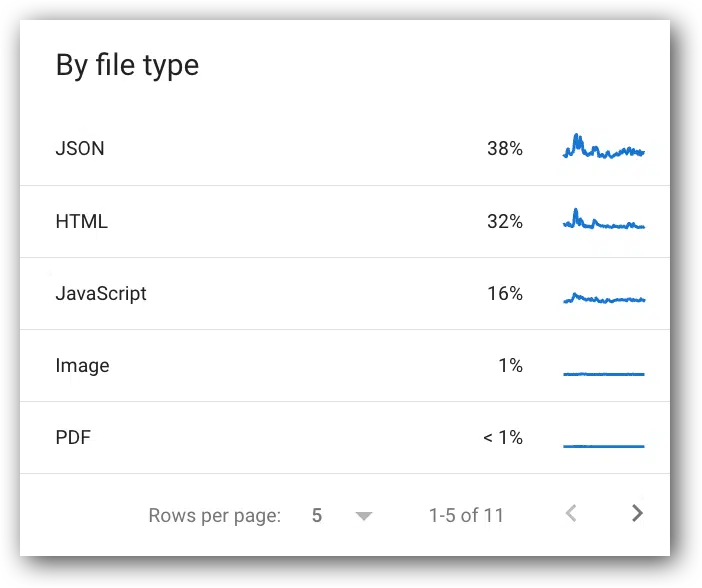

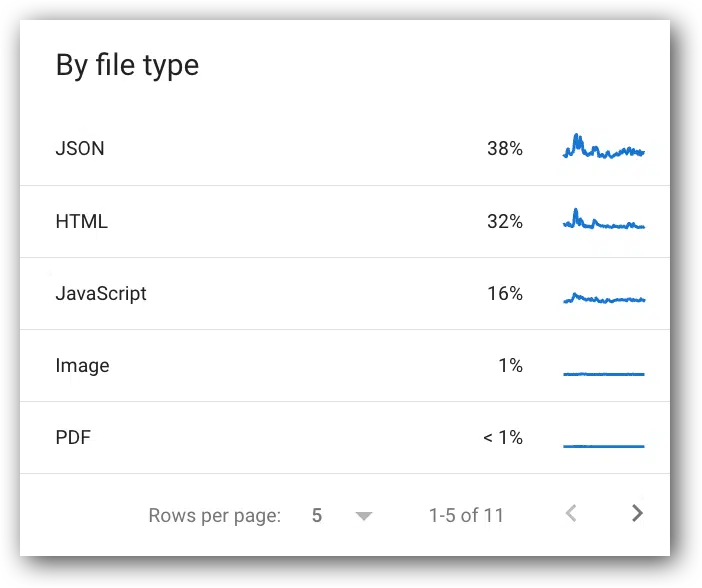

Crawl requests breakdown by file type

- View this report in Google Search Console.

- Google definition: “The file type returned by the request. Percentage value for each type is the percentage of responses of that type, not the percentage of bytes retrieved of that type.”

- Common file types:

  - JSON.

  - HTML.

  - JavaScript.

  - Image.

  - PDF.

  - CSS.

  - Syndication.

  - Other XML.

  - Video.

  - Other file type.

  - Unknown (failed requests).

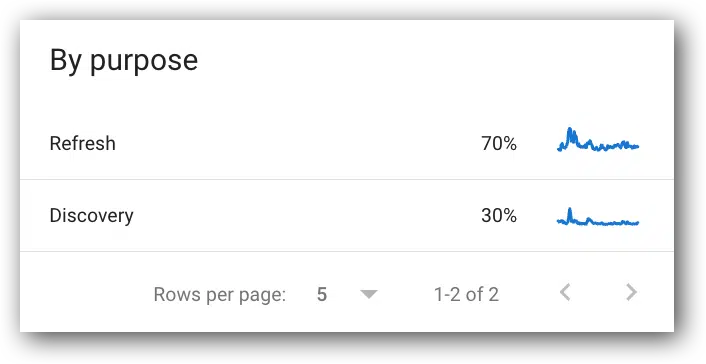

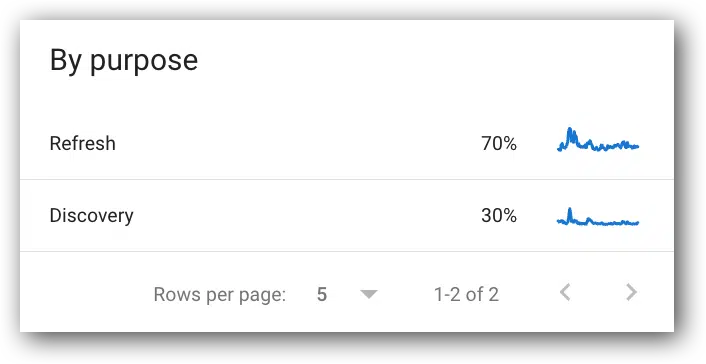

Crawl requests breakdown by crawl purpose

- View this report in Google Search Console.

- Two purposes: Discovery and Refresh.

- This is an interesting metric for presentations; however, it only has a few useful use cases. For example:

  - If the percent of Googlebot activity that’s for Discovery suddenly increases, but you’re not adding URLs to the site, then you have an action item to figure out what’s being crawled that shouldn’t be crawled.

  - If the percent of Googlebot activity that’s for Refresh decreases significantly, but you didn’t remove pages from the site, then you have an action item to figure out why fewer existing pages are being crawled.
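The two checks above can be written down as a small rule, sketched here under stated assumptions: the percentages are typed in by hand from the report, the function and parameter names are made up for this example, and the 5-point shift that counts as “suddenly” or “significantly” is an arbitrary placeholder you would tune for your own site.

```python
def crawl_purpose_action_items(prev, curr, added_urls, removed_urls,
                               min_shift_points=5.0):
    """Turn a shift in the Discovery/Refresh split into action items.

    prev and curr are dicts with 'discovery' and 'refresh' percentages
    copied by hand from two reporting periods. min_shift_points is an
    assumed cutoff in percentage points, not a Google-defined value.
    """
    items = []
    discovery_rise = curr["discovery"] - prev["discovery"]
    refresh_drop = prev["refresh"] - curr["refresh"]

    # Discovery share jumped even though no new URLs were launched.
    if discovery_rise >= min_shift_points and not added_urls:
        items.append("Figure out what is being crawled that shouldn't be.")

    # Refresh share fell even though no pages were removed.
    if refresh_drop >= min_shift_points and not removed_urls:
        items.append("Figure out why fewer existing pages are being crawled.")

    return items
```

The `added_urls` and `removed_urls` flags are the part only your team can answer, which is why this stays a prompt for a conversation and not an automated alert.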

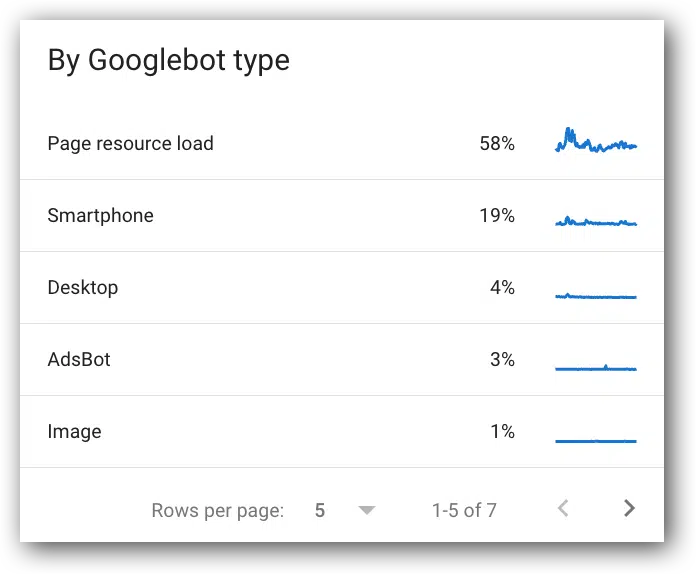

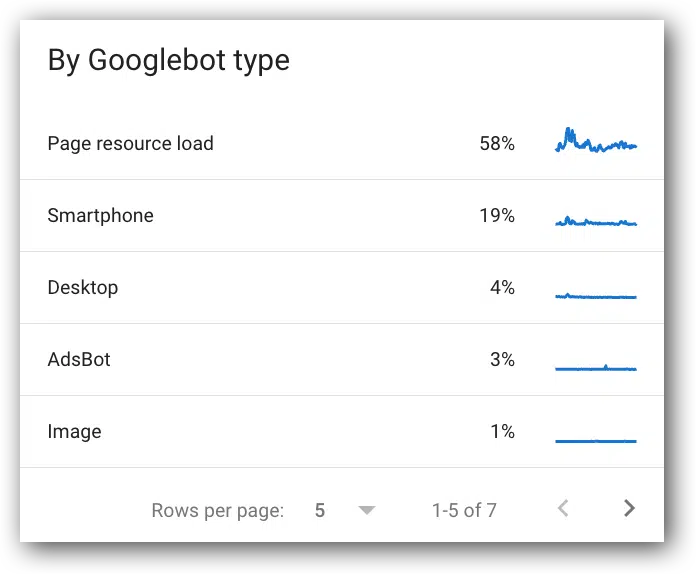

Crawl requests breakdown by Googlebot type

- View this report in Google Search Console.

- Google definition: “The type of user agent used to make the crawl request. Google has a number of user agents that crawl for different reasons and have different behaviors.”

- It’s an interesting metric, but not very useful. It just shows Google is still using their desktop crawler. Honestly, I usually ignore these metrics.

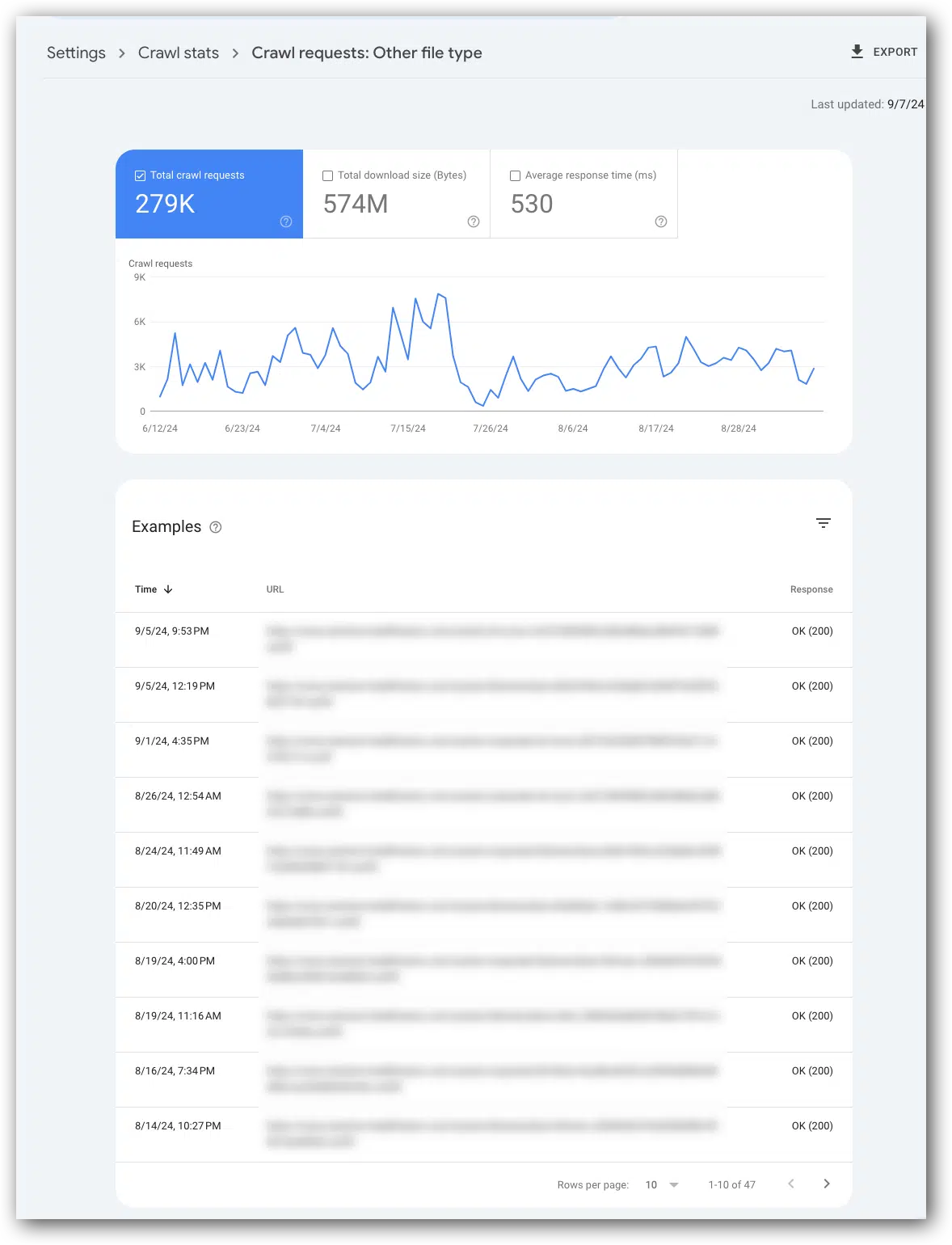

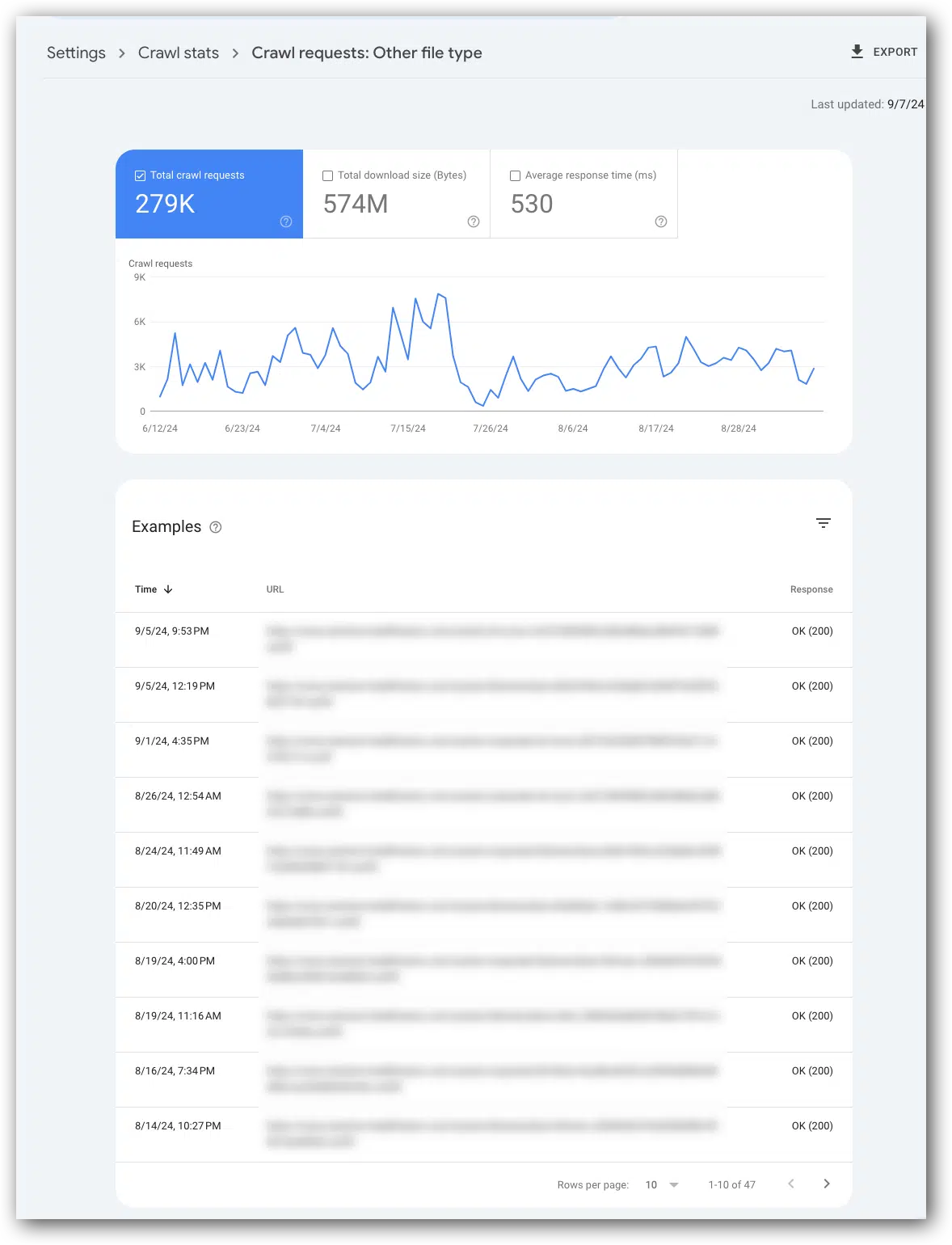

You can click into each metric for more data

Often when you present any SEO concern to product managers and developers, they want to see example URLs. You can click on any of the metrics listed in this report and get example URLs.

An interesting metric to look at is “other file types” because it’s not clear what’s in the “other file types” category (often it’s font files).

The screenshot below shows the examples report for “other file type.” Every file listed is a font file (blurred out for confidentiality reasons).

In this report of examples, each row reflects one crawl request. This means if a page is crawled multiple times, it could be listed more than once in the “examples.”

As with all Google Search Console reports, this is a data sample and not every request from Googlebot.

Do you share these metrics with developers and product managers?

These metrics will typically generate one of two thoughts:

- “There’s nothing to look at here.”

- “What could have caused that?”

In my experience, the answers to “what caused that” tend to require the input of product managers and/or developers.

When presenting the data and your questions about potential causes for issues, remember to clearly explain that these metrics are not user activity and only represent Googlebot’s activity and experience on the website.

I find product managers and developers often get a bit confused when discussing this data, especially if it doesn’t match up with other metrics they’ve seen or facts they know about the site.

By the way, this happens in most Google Search Console data conversations.

If there are no Crawl Stats fluctuations or correlations to be concerned about, don’t bring it up to development or product management. It just becomes noise and prevents them from focusing on more critical metrics.

What’s next?

Look at your crawl stats to make sure there are no spikes or correlations that are concerning.

Then, determine how often you want to look at these and set up systems that prompt you to check these and other Google Search Console metrics in a systematic, analytical way each month.

While you look at your Googlebot Crawl Stats, I’ll write Part 4 in this series, which will cover how to know which URLs you should focus on for technical SEO improvements and, in particular, Core Web Vitals metrics.

Dig deeper

This is the third article in a series recapping my SMX Advanced presentation on how to turn SEO metrics into action items. Below are links to the first two articles:
